Table 1: Total gowning time per employee versus capacity per area.

| Locker Room capacity (no. of people) | Grade D capacity (no. of people) | Grade C capacity (no. of people) | Average lead time (minutes) | Maximum lead time (minutes) |
| --- | --- | --- | --- | --- |
| 8 | 10 | 10 | 17.1 | 20.7 |
| 9 | 5 | 9 | 16.6 | 19.3 |
| 10 | 5 | 9 | 16.3 | 18.4 |
| 10 | 5 | 8 | 16.5 | 18.8 |
| 10 | 4 | 8 | 16.5 | 18.8 |
| 10 | 4 | 7 | 16.9 | 20.2 |

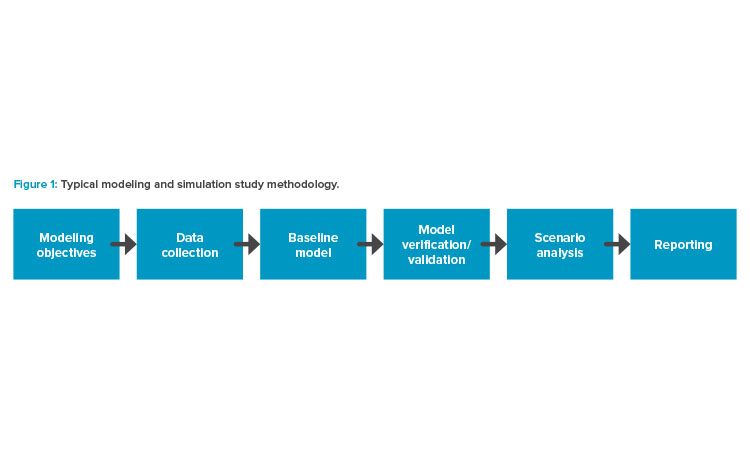

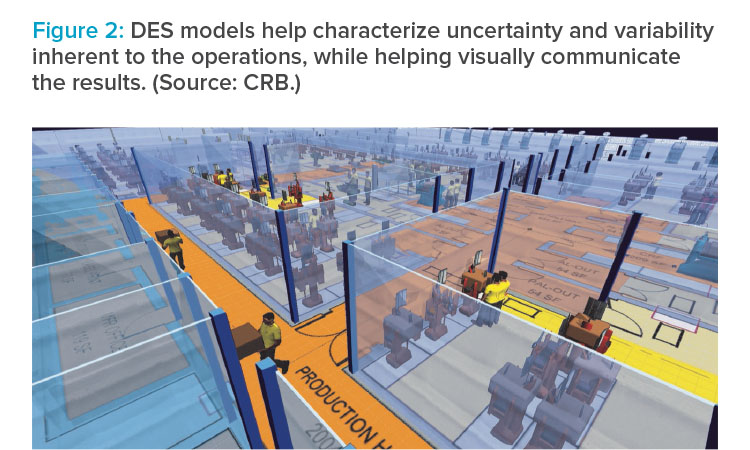

To make the most effective facility decisions, key workflows and relevant data were collected for manufacturing operations, QC, and supply chain requirements. Assumptions were carefully documented, and a baseline model was developed from these inputs and assumptions. The model was used to make key decisions about the number of suites and the number of platforms (sets of specialized equipment and technology) to be installed per manufacturing suite. The platform was considered the critical element of the value stream because of its cycle time and equipment cost. Because the technology is new and not fully vetted, expecting high production utilization would have been unrealistic; time had to be allotted for training and other (unforeseen) events.
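The link between a utilization cap and equipment count can be illustrated with simple capacity arithmetic. The sketch below is not the study's model; the function name `platforms_needed` and all input values (batches per year, cycle time, utilization caps) are hypothetical. It computes the smallest number of platforms whose usable hours, after capping utilization to leave time for training and unforeseen events, cover the required run hours.

```python
import math


def platforms_needed(batches_per_year: int, cycle_time_h: float,
                     available_h_per_year: float, max_utilization: float) -> int:
    """Smallest platform count whose utilization-capped hours cover demand.

    All inputs are hypothetical planning parameters, not values from the study.
    """
    if not 0 < max_utilization <= 1:
        raise ValueError("max_utilization must be in (0, 1]")
    required_h = batches_per_year * cycle_time_h  # total platform-hours of work per year
    usable_h_per_platform = available_h_per_year * max_utilization  # headroom kept for training, upsets
    return math.ceil(required_h / usable_h_per_platform)


# Hypothetical inputs: 300 batches/year, 48 h cycle time, 8,760 calendar hours/year
for cap in (0.95, 0.85, 0.70):
    print(f"utilization cap {cap:.0%}: {platforms_needed(300, 48.0, 8760.0, cap)} platform(s)")
```

With these assumed inputs, lowering the cap from 85% to 70% raises the requirement from two platforms to three, which is why the planned utilization ceiling feeds directly into equipment and suite sizing.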

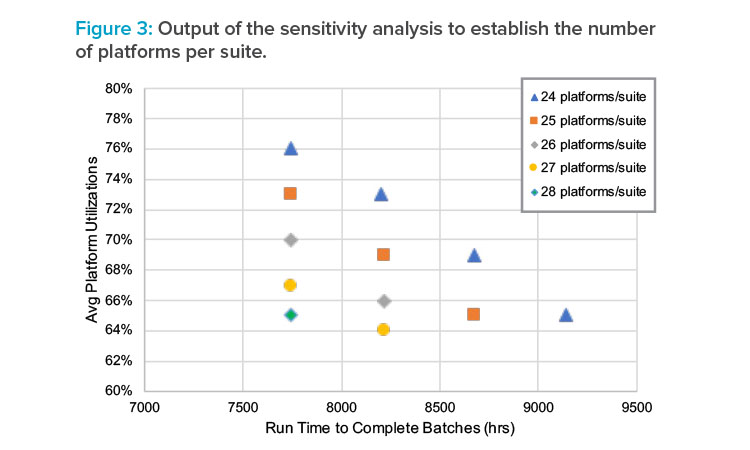

Sensitivity analysis was performed to identify the best combination of acceptable utilization, batch cadence, and equipment quantities needed to meet the target demand within the given time frame. Figure 3 shows the graphical output of this analysis. The sensitivity analysis revealed that annual demand could not be met if the run time exceeded approximately 8,500 hours.
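A sensitivity sweep of this kind can be sketched as a grid over the decision variables. In the hypothetical example below, each combination of batch cadence (batches per week), number of platforms, and utilization cap is checked against the annual run time each platform would have to log. The 8,500-hour ceiling is taken from the finding above; the 48-hour cycle time, the 52-week year, the even spreading of batches across platforms, and the helper names are assumptions for illustration. A deterministic grid like this only screens combinations; a discrete-event model would additionally capture variability.

```python
from itertools import product

HOURS_PER_YEAR = 8760.0
RUN_TIME_LIMIT_H = 8500.0  # approximate ceiling reported in the sensitivity analysis
CYCLE_TIME_H = 48.0        # hypothetical platform cycle time per batch
WEEKS_PER_YEAR = 52        # hypothetical operating weeks


def run_time_per_platform_h(batches_per_week: float, n_platforms: int) -> float:
    """Annual run hours per platform, assuming batches are spread evenly."""
    return batches_per_week * WEEKS_PER_YEAR * CYCLE_TIME_H / n_platforms


def sweep(cadences, platform_counts, utilization_caps):
    """Return (cadence, platforms, cap, run_h, feasible) for every combination."""
    rows = []
    for cadence, n, cap in product(cadences, platform_counts, utilization_caps):
        run_h = run_time_per_platform_h(cadence, n)
        limit_h = min(RUN_TIME_LIMIT_H, cap * HOURS_PER_YEAR)  # tighter of the two ceilings
        rows.append((cadence, n, cap, round(run_h), run_h <= limit_h))
    return rows


results = sweep(cadences=(4, 6), platform_counts=(2, 3, 4), utilization_caps=(0.70, 0.85, 1.00))
for cadence, n, cap, run_h, ok in results:
    if not ok:
        print(f"infeasible: {cadence} batches/week on {n} platforms at {cap:.0%} cap ({run_h} h/yr)")
```

With these assumed numbers, only six batches per week on two platforms fails, and only under the 70% and 85% caps; a real study would replace the fixed cycle time with the distributions used in the simulation model.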

The model was also used to simulate several scenarios, for example, to:

- Level load work and understand the extent of cross training required to avoid additional head count

- Assess waste handling strategies

- Justify certain automation in QC to reduce turnaround time, head count, and equipment needs

While the study looked at optimizing equipment and head count, key space types (e.g., gowning areas) were also right-sized. Although gowning is necessary, the time spent gowning should be treated as essential non-value-added time and reduced where possible. However, adding more gowning space and maintaining it can be cost prohibitive. Thus, it is important to strike the right balance between gowning time and the investment and operating expenses of maintaining large gowning spaces. Table 1 shows results from the simulation analysis aimed at reducing the overall lead time per operator (i.e., the time each operator takes to change from street clothes into the gowning required to enter the Grade C space) as a function of room occupancy.
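The gowning analysis can be approximated with a small queueing simulation. The sketch below is hypothetical and much simpler than a full discrete-event model: operators arrive over a short window, pass through the locker room, Grade D, and Grade C gowning rooms in sequence, and each room holds at most its stated capacity, served first come, first served. It uses the standard multi-server FIFO recursion (a min-heap of slot release times) instead of an event-list engine such as SimPy, and it assumes unlimited waiting space between rooms. The room capacities in the usage example come from Table 1, but the triangular gowning-time parameters, arrival window, and head count are invented, so the outputs will not reproduce the table.

```python
import heapq
import random
from statistics import mean

# Hypothetical gowning times per room: (low, mode, high) in minutes. Not from the study.
GOWNING_TIMES = {
    "Locker Room": (3.0, 4.0, 6.0),
    "Grade D": (4.0, 5.0, 7.0),
    "Grade C": (5.0, 6.5, 9.0),
}


def simulate_gowning(capacities, n_operators=30, arrival_window_min=15.0, seed=1):
    """Return each operator's lead time (arrival to exit of the Grade C gowning room).

    Rooms are visited in order; each room holds at most `capacity` people at once,
    served first come, first served, with unlimited waiting space between rooms.
    """
    rng = random.Random(seed)
    arrivals = sorted(rng.uniform(0.0, arrival_window_min) for _ in range(n_operators))
    ready = list(arrivals)  # time each operator can enter the next room
    for (low, mode, high), capacity in zip(GOWNING_TIMES.values(), capacities):
        free_at = [0.0] * capacity  # min-heap of times each occupancy slot frees up
        # Serve operators in the order they become ready for this room (FIFO).
        for i in sorted(range(n_operators), key=lambda k: ready[k]):
            start = max(ready[i], heapq.heappop(free_at))
            finish = start + rng.triangular(low, high, mode)
            heapq.heappush(free_at, finish)
            ready[i] = finish
    return [done - arrived for done, arrived in zip(ready, arrivals)]


# Capacities (Locker Room, Grade D, Grade C) taken from rows of Table 1
for caps in [(8, 10, 10), (10, 5, 9), (10, 4, 7)]:
    lead = simulate_gowning(caps)
    print(caps, f"average {mean(lead):.1f} min, maximum {max(lead):.1f} min")
```

Larger rooms shorten queueing and therefore lead time, but every extra occupancy slot adds build and operating cost; sweeping capacities like this is one way to look for the balance point described above.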

Conclusion

Operational simulations are a powerful tool for estimating the resources required, which in turn drive space needs and facility size. Although it is important to study the main production systems, the study should also include support functions, such as QA/QC and warehousing. In addition to equipment, head count, and space needs, these models can help right-size intermediate staging spaces, develop waste handling strategies, confirm the adequacy of utilities, and so on. Operational simulation studies should be undertaken in the early stages of design.

Because models can only be as robust as the data used to construct them, excellent communication with subject matter experts and accurate documentation of inputs/assumptions are critical components of operational simulation. The right questions must be asked to ensure that the right data are obtained and that the model will address the users’ needs. It is essential to translate and communicate the underlying algorithms in a manner that can be understood by the people providing the data on which the model will be based. Communicating the results generated by a simulation in a manner that the stakeholders and end users understand is equally important.

As mentioned earlier, operational models built using the DES technique help characterize the impact of variability and uncertainty. However, running multiple replications is key to success with these models. Each replication draws different values from the input statistical distributions, so that, across replications, the model captures the full range of likely outcomes. Choosing the number of replications is a trade-off: too few give imprecise estimates, whereas too many increase the overall model run time without adding useful precision.
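The replication trade-off can be made concrete with a common pilot-run calculation: run a small number of replications, compute the confidence-interval half-width of the output mean, and estimate how many replications would shrink it to a target precision h using n ≈ (z·s/h)². The sketch below uses a normal approximation (`statistics.NormalDist`) rather than the Student t quantile, which is slightly optimistic for small pilots; the pilot values and target half-width are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev


def z_value(confidence: float) -> float:
    """Two-sided standard-normal quantile (normal approximation to Student t)."""
    return NormalDist().inv_cdf(0.5 + confidence / 2)


def ci_half_width(samples, confidence=0.95):
    """Half-width of the confidence interval for the mean of replication outputs."""
    return z_value(confidence) * stdev(samples) / math.sqrt(len(samples))


def replications_needed(pilot, target_half_width, confidence=0.95):
    """Estimate total replications so the half-width falls to target: n = (z*s/h)^2."""
    n = math.ceil((z_value(confidence) * stdev(pilot) / target_half_width) ** 2)
    return max(n, len(pilot))  # never fewer than the pilot already run


# Hypothetical pilot: average operator lead time (min) from 10 replications
pilot = [16.2, 17.0, 16.5, 17.4, 16.1, 16.8, 17.2, 16.6, 16.9, 16.4]
print(f"mean {mean(pilot):.2f} min, 95% CI half-width {ci_half_width(pilot):.2f} min")
print("replications for a 0.1 min half-width:", replications_needed(pilot, 0.1))
```

Halving the target half-width roughly quadruples the replications required, which is where the run-time cost of over-specifying precision comes from.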

Like quality documents, simulation models should be considered living documents. These models can also be viewed as digital twins of an actual facility. Before one or more significant changes are made to a facility or an operation within it, simulations can be run to determine the impact of the changes and develop strategies to overcome any adverse effects.

Once any change is made to a facility design, the model should be modified to reflect that change (i.e., to create a new baseline). The simulation can then be rerun to confirm that the desired result was obtained. Updating the model is also essential so that it continues to reflect the current state of the facility. Whenever additional actual data that can inform the model become available, they should be added to the model; this helps improve the model's performance and prediction accuracy. For instance, once the facility has been constructed and is in operation, actual data on the process cycle time for a particular step can be fitted to a probability distribution and used to rerun the analysis.
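Refitting a step's cycle time from operating data can be as simple as a maximum-likelihood fit of a candidate distribution. The sketch below assumes a lognormal family (a common choice for positive, right-skewed durations, but an assumption here) and hypothetical measured cycle times; the fitted parameters are then used to draw new samples for the rerun. In practice, several candidate families would be compared for goodness of fit, for example with the fitting routines in `scipy.stats`.

```python
import math
import random
from statistics import fmean, pstdev


def fit_lognormal(observations):
    """Maximum-likelihood lognormal fit; returns (mu, sigma) of log(cycle time)."""
    if any(x <= 0 for x in observations):
        raise ValueError("a lognormal fit requires strictly positive data")
    logs = [math.log(x) for x in observations]
    return fmean(logs), pstdev(logs)  # the MLE of sigma uses the 1/n (population) form


# Hypothetical measured cycle times (hours) for one process step
observed = [46.5, 48.2, 47.9, 51.3, 49.0, 45.8, 50.4, 48.7, 47.1, 52.6]
mu, sigma = fit_lognormal(observed)

# Draw cycle times from the fitted distribution for the next simulation run
rng = random.Random(7)
samples = [rng.lognormvariate(mu, sigma) for _ in range(1000)]
print(f"mu={mu:.4f}, sigma={sigma:.4f}, median={math.exp(mu):.1f} h")
```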