Current Challenges in Implementing Quality Risk Management

This article was published in the November/December 2016 edition of Pharmaceutical Engineering® magazine. It is one of five articles nominated for the Roger F. Sherwood Article of the Year Award, all of which will be posted to iSpeak throughout the week of 5 December.

The views expressed in this paper are those of the authors and should not be taken to represent the views of the Health Products Regulatory Authority.

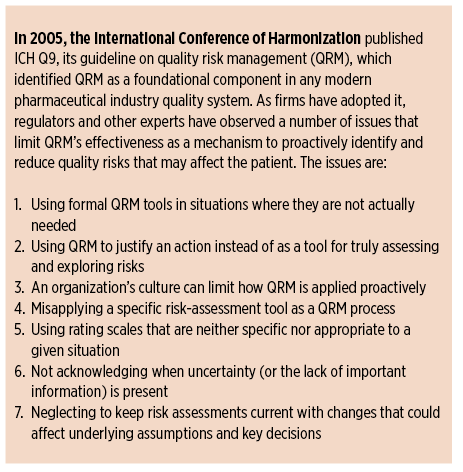

Since quality risk management (QRM) was formally introduced to the pharmaceutical industry in 2005 with the publication of the International Conference on Harmonisation (ICH) Q9 guideline on quality risk management,1 pharmaceutical firms have adopted and implemented its concepts, tools, and methods in different ways and at different rates. As a consultant/trainer and an inspector at a national regulatory agency, we have independently visited or inspected a variety of companies in North America, Europe, the Middle East, and Asia; we have listened to presentations at technical conferences; and talked with QRM practitioners. Through these experiences, we have observed several recurring themes that appear to be common industry challenges. This paper identifies and discusses seven of them; they are given with the intent of stimulating a discussion on the implementation and use of QRM and the difficulties that are currently seen.

1. Using formal QRM on everything

Management will sometimes ask that a formal QRM process—i.e., using recognized tools like failure mode effects analysis (FMEA) or fault-tree analysis (FTA)—be performed on issues ranging from the critical to the trivial. This broad misapplication of the QRM process has caught the attention of good manufacturing practice (GMP) inspectors who now see formal QRM reports addressing issues that in the past would have been decided simply on the basis of key GMP requirements that were well understood by management.2 Using a risk-assessment tool to “justify” the release of a batch of product following a serious contamination incident, for instance, without reprocessing or reworking that batch now appears to be occurring more frequently than in the past, with highly questionable batch release decisions in some cases. It is often better to focus efforts on root cause analysis and to take appropriate corrective and preventive actions (CAPAs) without overreliance on subjective risk assessments that could lead to the conclusion that the risk is low and that no actual CAPAs are needed. With a solid root cause analysis and a good understanding of the likely impact of a problem issue (on batch quality and to patients) in place, these approaches can often be more timely and effective than moving through all of the QRM phases. Formal risk assessments sometimes fail to add value or clarity to a situation because risk assessments often only superficially address root cause analysis, resulting in ineffective risk-control actions. In addition, because risk assessments are often performed by busy people, the results are often not as supported by scientific rigor as they should be. This can lead to high levels of subjectivity and uncertainty in outputs and conclusions, which further contribute to risk. Consider, for example, a case involving a potential product contamination incident at an active pharmaceutical ingredient (API) site. 
At the end of a production campaign, a metal mesh screen used in one of the final steps was found to have broken; a large section of the mesh material was torn from its housing. Some batches from the campaign had already been released and shipped to a drug product manufacturing site, while batches still at the API site were given a “hold” status and placed into quarantine. In response to the problem, several actions were taken:

- A deviation was raised to investigate the issue, coupled with a formal risk assessment exercise (using FMEA) to decide whether to release the remaining batches.

- The risk assessment found that the screen break presented a low risk of batch contamination.

- This conclusion was used to justify the release of the quarantined API batches to a drug product manufacturing site.

Later, during a regulatory inspection, the inspector reviewed the QRM exercise. Significant problems were found, including customer complaints citing metal in the API. This cast considerable doubt on the validity of the QRM outcomes and the resulting batch release decisions. For example:

- Because the empirical evidence gained from the deviation investigation was misinterpreted, scientifically unsound likelihood estimates were made that significantly affected the risk assessment outcomes. The company, for example, maintained that the mesh section had remained intact despite being dislodged, and assigned a low likelihood rating that metal fragments had entered any API material. But the evidence pointed to the contrary—a piece of mesh wire had broken off when the failed screen was examined while being washed.

- There was no documented rationale as to why a piece of 316 stainless steel up to 850μm in length in a tablet represented a hazard with a medium severity rating and not a high severity rating, nor was there a documented clinical assessment of potential contamination and its risk to patients.

- The company relied on controls and risk mitigation assumed to be in place at the drug product manufacturer to detect metal in the formulated tablets, and paid inadequate attention to detecting and removing metal fragments from the API lots of concern while they were still on-site. This was not considered an acceptable approach, as it meant that the risk of releasing contaminated API to the drug product site had not been adequately assessed.

Providing a subjectively derived risk score to a manager who may not know how to interpret it correctly (e.g., a risk score indicating a low likelihood of occurrence but concerning an issue of potential patient impact and harm) may result in a decision that runs counter to GMP principles.

As we noted before, some organizations incorporate formal quality risk management in all such instances, but this is not actually a GMP requirement. Applying formal QRM “for everything” is at variance with ICH Q9 (section 1, page 2), which states, “The level of effort, formality and documentation of the quality risk management process should be commensurate with the level of risk.” Additionally, some quality decisions should be so obvious that conducting an extensive risk assessment to support decision making is not necessary. The above example showed how an improperly performed risk assessment can adversely affect batch release decision-making. (For a detailed review of inspectional issues in relation to improperly performed risk assessments, see Waldron, Greene, and Calnan.3) In this case, one has to ask whether the firm’s management should even have requested a formal risk assessment to determine if the API lots in question should be released without reprocessing or reworking them. Even if the intent of such a risk assessment was truly focused on understanding the risks presented by a broken screen, the subjective nature of the risk-scoring approach and the potential implications for patients seem to have been overlooked. Again, from the Q9 guideline (section 1, page 6): “Appropriate use of quality risk management [which in this case, we posit, was inadequately performed] can facilitate but does not obviate industry’s obligation to comply with regulatory requirements.” In this case, a key regulatory requirement was to not release contaminated API batches to a drug product manufacturing site.

2. Risk assessment to justify a decision, not assess risk

The intent of QRM is to make data-driven and scientifically sound decisions proactively, not to justify an action or a decision that has already been taken. The outcome of a risk assessment may, of course, support an action, but there should be a logical, fact-grounded rationale to defend what is done. Considering risks should be a thoughtful inquiry instead of a biased vindication. Our experience shows that when risk assessments fall into this category, they sometimes lead to risk treatments that, when challenged by regulatory inspectors during inspections, do not withstand any level of scrutiny. In these cases, decisions are often not aligned with facts or the way that the risk question was explored. “Change control” is a quality system element used by some organizations in which QRM is used to justify the proposed plan asking “What could go wrong?” and then devising a control strategy for that possibility. Consider a case where QRM was performed in conjunction with a proposal at a drug product facility to revise (i.e., mainly reduce) calibration frequencies for instruments and other measuring devices at the site.

- In the risk assessment, the proposed reduced calibration frequencies identified two unacceptable risks. These were considered mitigated by three types of currently in-place detection-related controls: daily verification checks, in-process controls, and finished product testing.

- While to some the risk-reduction strategy may have seemed adequate, when the details were examined on inspection, it became evident that many types of instruments and equipment items at the site (including those deemed critical—e.g., pressure transmitters and in-line temperature probes) were not required by procedure to have any kind of daily verification checks performed, and the validity of this type of control for the risk in question was highly questionable.

- It was not clear how the two other types of documented risk-mitigating controls—in-process controls and finished product testing—could lead to the timely detection of process variation due to out-of-calibration instruments and other equipment items.

- Closer examination revealed that the risk-assessment part of the change control could actually assign the same 12-month default calibration frequency to both GMP-critical and GMP-noncritical instruments. There was no documented explanation to justify the practice, even though inspectors often want to know the basis or rationale for a decision.

Given the nature of the deficiencies seen with this application of QRM, it was clear that a true sense of inquiry and objectivity were lacking in the risk-assessment process to evaluate the proposed change; the emphasis seemed more on justifying the change control proposal. While Q9 does use the word “justify” in its guidance (though only twice in Annex II of the guideline), the emphasis of risk assessment in Q9 is more on “evaluate” or “determine,” terms that are used multiple times. This points to the importance of analysis over defending a particular position or proposal.

3. Reactive “firefighting” instead of proactive QRM

Some companies take pride in their ability to react quickly to quality incidents. Their organizational culture rewards individuals and teams who show extra effort in solving difficult technical and operational problems. These so-called heroes are fully focused on the mission at hand (solving the problem), and they receive increased recognition and praise in return. The problem with this model is that the work and effort being highlighted are usually unsustainable in the long term, as they can be very labor intensive. Also, the “we-can-fix-it” attitude often precludes the development of more proactive systems.5,6,8 Unfortunately, these organizations do not give the same visibility to those who are the fire preventers: the people who take proactive steps so that the problems do not occur in the first place.

During a month-long holiday shutdown, for example, an injectable product manufacturer expanded the scope of work to include digging through a hallway floor and into soil to replace a sewer line. Since the hallway was adjacent to and outside of the graded areas (A/B and C/D), engineering staff felt that no proactive change control was necessary. Unfortunately, mold and spores made it through the inadequate containment controls and into the aseptic manufacturing area. Multiple failures to bring the area back into production required extensive investigation and remediation, and experts from other corporate sites were brought in to help. When the area finally was back on-stream (four months later than planned), all involved went out for a celebratory dinner paid for by management in recognition of their accomplishment. If before the digging started someone had asked a simple risk question such as, “What might go wrong if we go ahead with this plan?” or “To what hazards might our production facility be exposed?” effective precautions might have been taken. Regrettably, those who proactively ask these “what-if” questions are not always recognized for anticipating unwanted situations. ICH Q9 (section 1, page 1) is useful in this area, as it highlights the proactive nature of QRM: “An effective quality risk management approach can further ensure the high quality of the drug (medicinal) product to the patient by providing a proactive means to identify and control potential quality issues during development and manufacturing.”

4. Equating FMEA with QRM

A 2006 PDA survey9 found that FMEA was the most widely applied tool for assessing change controls and for adverse event, complaint, or failure investigations at sites. Observations and anecdotal evidence suggest that this is still true. While FMEA is useful and versatile, some organizations consider it a complete QRM tool in itself. This is in part because typical FMEA worksheets include columns for risk treatment items (i.e., risk-reducing actions) and a reassessment of the risk priority numbers (RPNs). This approach essentially leaves out the third and fourth elements: risk communication and risk review. Despite this limitation, many companies still rely on FMEA as their overall approach to QRM. Some firms have not yet seen that hazard identification and risk assessment tools can be used together synergistically. For example, a high-level preliminary risk assessment might be used first, followed by FMEA on particular parts of a drug container’s design. FTA—a more detailed deductive tool—would be appropriate in understanding the causes of key failure modes. QRM does not necessarily need a complicated and complex process to be effective.

For example, one company decided to completely redesign the packaging used for many of its products to ensure a common brand livery (identity). Within weeks of the new product pack launch, complaints from pharmacists began to arrive, pointing out the risks of dispensing errors because of the loss of adequate differentiation between the rebranded products (and between strengths of the same products). Had someone adequately considered the simple risk question of “What might go wrong if we implement this packaging design change?” such problems might have been avoided. Sometimes those assessing risks using an FMEA get so lost in the details that they lose perspective on the larger risk picture. A review of a QRM report for an API process showed that a firm’s development engineers had performed a very detailed, well-executed FMEA where the highest risks related to charging particular chemicals into the process and maintaining narrow temperature controls. When asked about the five most serious risks in manufacturing the API, however (not just the specific process operations), or what the technical staff worried about at night, the risk-assessment team members could not come up with a response. It was clear that they did not have the bigger risk picture in mind. Even when considering the flexibility and usefulness of FMEA as a tool only for risk assessment and control, it is still relatively weak in these areas. FMEA in and of itself offers only a superficial approach to root cause analysis for failure modes, and it provides few, if any, strategies for limiting how much subjectivity may be associated with its RPN outputs.

Despite several very useful publications on the limitations of FMEA and its RPN-based approach to risk prioritization (see, for example, Schmidt12), the industry continues to place a high reliance on FMEA as a primary tool for conducting QRM. Some FMEA limitations can be overcome by using other tools in a synergistic manner. When identifying the potential root causes of failure modes in an FMEA, for example, a number of other tools can be used, such as Ishikawa (root cause) analysis, the “Five Whys” tool, FTA, and event-tree analysis,10,11 a tool that has found acceptance in the aeronautics industry but is less used in the current GMP environment.
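One way to see why an RPN on its own can mislead is to compute it for two very different failure modes. The sketch below is illustrative only: the failure modes, the 1–10 scales, and the ratings are hypothetical and are not taken from any case described in this article. It assumes the common convention of multiplying severity, occurrence, and detection ratings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    """A single FMEA row. All scales here are hypothetical 1-10 ordinal scales."""
    name: str
    severity: int    # 1 = negligible impact, 10 = most severe impact
    occurrence: int  # 1 = rare, 10 = frequent
    detection: int   # 1 = almost certain to be detected, 10 = unlikely to be detected

    @property
    def rpn(self) -> int:
        # Conventional risk priority number: product of the three ratings.
        return self.severity * self.occurrence * self.detection


# Hypothetical failure modes chosen so that the RPNs tie.
modes = [
    FailureMode("metal fragment in API", severity=10, occurrence=1, detection=4),
    FailureMode("smudged drum label", severity=2, occurrence=5, detection=4),
]

for m in modes:
    print(f"{m.name}: S={m.severity} O={m.occurrence} D={m.detection} RPN={m.rpn}")
```

Both rows produce the same RPN even though one carries the highest severity rating and the other a low one. A reviewer who sees only the final number cannot tell them apart, which is one reason to read the individual ratings and their rationale alongside any RPN.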

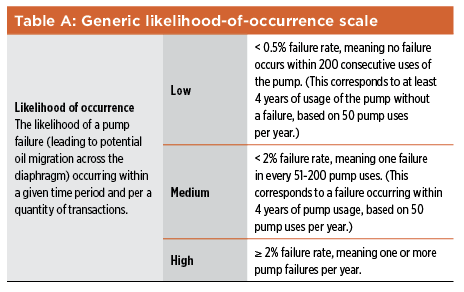

5. Inappropriate rating scales

Rating scales are critical to accurate and consistent descriptions of hazard likelihood, impact, and detectability. Scale-based values are assigned during risk assessment and then used during risk evaluation when determining which risks are to be addressed and reduced. Experience has shown, however, that creating and using rating scales properly are two of the most challenging aspects when implementing a QRM program.7 Firms use a variety of scales for rating occurrence, severity, and detection, with some more reasonable and defendable than others: 1–3, 1–5, or 1–10 are used frequently. Some scales rate severity numerically, as 1, 3, 5, 7, 10, 20, and 40. A rating of 20, for example, means that there may be one fatality due to the drug; 40 may mean that multiple fatalities could be involved. When one firm was asked about its unique rating scale for detectability, they said that it came from an outside consulting firm; no one inside the company could fully explain its logic. Generally, two different approaches to scale development are used in the industry. The most common is to apply the same scale (particularly the scale for likelihood or probability of occurrence) to all risk assessments, regardless of the nature of the individual situation, product, or process that is assessed. Firms (and some regulatory agencies) like this approach because the risk ratings may then be compared across products, processes, departments, and even sites. One limitation of this approach is that while the probability of occurrence scale may be entirely appropriate for one manufacturing process or product, it may be entirely out of range for others.

Another is that using the same scale in all risk assessments may place too much emphasis on the final RPNs. This is a problem because directly comparing RPNs from a range of risk assessments fails to recognize that RPNs are derived from ordinal number scales; multiplying ordinal numbers to generate RPNs has questionable mathematical validity.

The second approach to using likelihood scales is to use available information, as limited or as imperfect as it may be, to develop a customized scale for a particular risk assessment. A scale could be based on data from 50 small-scale lots of a drug substance produced in the last two years as part of product development work, for example. A 10-point scale based on available data created here could connote much more precision than the actual experience base provides; a three-point scale may be more appropriate in that situation. If, on the other hand, the process for manufacturing this drug substance was similar to that used for other products at the site (e.g., a common fermentation process, or a particular chemical synthesis pathway), using that knowledge could be very appropriate when developing an occurrence scale. This could result in a much larger experience set.

Several firms that we have recently visited or inspected have developed likelihood and severity scales that use the same ordinal number ranges (1–5) or the same levels (e.g., the levels for severity may range from negligible to serious), but they also have keywords or definitions assigned to each part of the scale that must be considered in a risk-assessment exercise. In a 1–5 ordinal number severity scale, for example, there may be five degrees of patient-related impacts, a set of keywords describing five different degrees of GMP noncompliance, and other sets of keywords relating to drug availability or hazards affecting critical quality attributes, etc. So instead of simply assigning a severity rating based on the high-level names associated with the available severity levels (e.g., level 2: low severity), the risk-assessment team must consider specific keywords that define each level to arrive at a severity rating for that hazard or failure mode. This helps assign severity ratings in a more consistent and less biased manner. A similar approach can be taken when customizing likelihood-of-occurrence scales for individual risk assessments. Scales can have keywords and definitions that relate to occurrences per unit of time (such as one event or fewer in five years, or one or more events every week), per number of batches, per number of units produced (e.g., tablets, vials), etc.
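A keyword-anchored severity scale of the kind described above could be represented as a simple lookup structure. The following is a minimal sketch, not a recommended scale: every keyword, the three dimensions chosen, and the rule that the overall severity is the highest level selected on any dimension are assumptions made for illustration.

```python
# Hypothetical keyword definitions for a 1-5 severity scale. A real scale
# would be written, justified, and approved by the QRM team.
SEVERITY_SCALE = {
    "patient impact": {
        1: "no foreseeable effect on patients",
        2: "minor, transient discomfort",
        3: "reversible harm requiring treatment",
        4: "serious or irreversible harm",
        5: "life-threatening harm",
    },
    "GMP compliance": {
        1: "no deviation from procedures",
        2: "minor documentation lapse",
        3: "deviation from an approved procedure",
        4: "major GMP deficiency",
        5: "critical GMP deficiency",
    },
    "drug availability": {
        1: "no supply impact",
        2: "short delay to a single batch",
        3: "delay affecting several batches",
        4: "market shortage likely",
        5: "prolonged shortage of a critical medicine",
    },
}


def rate_severity(selections):
    """Return (level, rationale) from the level the team chose per dimension.

    Assumption: the overall severity is the highest level chosen on any
    dimension. The rationale lines record which keywords drove the rating.
    """
    missing = set(SEVERITY_SCALE) - set(selections)
    if missing:
        # Force the team to consider every dimension before a rating is given.
        raise ValueError(f"no keyword selected for: {sorted(missing)}")
    rationale = [
        f"{dim}: level {lvl} ({SEVERITY_SCALE[dim][lvl]})"
        for dim, lvl in selections.items()
    ]
    return max(selections.values()), rationale


level, rationale = rate_severity(
    {"patient impact": 4, "GMP compliance": 3, "drug availability": 2}
)
print(level)
for line in rationale:
    print(line)
```

Returning the rationale together with the level keeps the keywords that justified the rating attached to it, so a later reviewer can see why the level was chosen and not only what it was.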

In addition to having scales customized for a specific risk-assessment exercise, it is also important for the QRM team to document its rationale for making key decisions, such as the construction of the scale, the selection of a particular category, and the like. This can help support the ratings that are assigned, and can be a useful source of information for those reviewing the risk-assessment exercise in the future.
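The concern raised earlier in this section about multiplying ordinal numbers can be made concrete with a small calculation. In the sketch below, the two failure modes and both sets of scale labels are hypothetical. The only property that matters is that both labelings keep the five levels in the same order, which is all an ordinal scale guarantees.

```python
def rpn(ratings, labels):
    """Multiply the numeric labels assigned to (severity, occurrence, detection) levels."""
    s, o, d = (labels[level] for level in ratings)
    return s * o * d


# Two order-preserving ways of labeling the same five ordinal levels.
consecutive = {1: 1, 2: 2, 3: 3, 4: 4, 5: 5}
stretched = {1: 1, 2: 3, 3: 4, 4: 7, 5: 10}  # hypothetical alternative labels

# Hypothetical failure modes, given as (severity, occurrence, detection) levels.
mode_a = (4, 2, 1)
mode_b = (3, 3, 1)

for name, labels in (("consecutive", consecutive), ("stretched", stretched)):
    print(name, "A:", rpn(mode_a, labels), "B:", rpn(mode_b, labels))
```

With consecutive labels, mode B scores higher than mode A (9 versus 8). With the stretched labels, the ranking reverses (16 versus 21), even though no team member changed a single rating. Because the numbers on an ordinal scale carry order but not distance, a ranking built on their product depends on an arbitrary labeling choice.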

6. Introducing uncertainty via subjectivity

Risk increases as uncertainty increases. The ISO 31000 standard, published in 2009, defines risk (13, page 9) as the “effect of uncertainty on [achieving one’s] objectives.” Uncertainty can be due to a number of different factors, such as lack of information about or limited experience with a process or material during the early stages of process development. Uncertainty can also be present when options to detect a particular hazard are lacking or the detection methods are not used. Acknowledging that uncertainty is present or that you do not know something are two of several ways to respond. Subjectivity in risk assessment work is another important bias that should be addressed.14 Subjectivity can be the result of differences in perceptions, stakeholder values and experiences, and other factors. ICH Q9 discusses the difficulty of achieving a shared understanding of the application of risk management because each stakeholder might:

- Perceive different potential harms

- Place a different probability on each harm occurring

- Attribute different severities to each harm

Subjectivity can be compounded by groupthink as part of brainstorming activities—during hazard identification steps, for example, and when probability ratings are being assigned. In addition, a lack of diversity in risk-assessment teams can limit the breadth and effectiveness of risk-assessment exercises. Subjectivity can have other negative effects, as well. As discussed in ISO 31000,13 stakeholders form judgments about risks based on differences in values, needs, assumptions, concerns, etc. As a result, it can be difficult to reach agreement on the acceptability of a particular risk, or on the suitability of the course of action proposed to address that risk. This is not to say that subjectivity should be banished from discussions on risk. Without the critical analysis of alternative viewpoints, groupthink can blind team members to significant risks. Subjectivity is not just how we perceive and discuss risks—it can also be a consequence of the scoring method used to estimate the risk. In the likelihood-of-occurrence scale shown in Table A, the phrases “very unlikely,” “unlikely,” and “very possible” are used to indicate “low,” “medium,” and “high.” This represents another way to assess the likelihood of occurrence (instead of the quantity of transactions, also provided for in the table—one per hundred, etc.). If the quantitative aspect of the occurrence scale were not there, however (as is often the case), the scale would provide no guidance to what these phrases mean, especially in the context of the risk-estimation exercise in question, introducing subjectivity into the likelihood-of-occurrence ratings.

(Research has found that different people interpret phrases such as these in very different ways; see Budescu, Por, and Broomell for a useful research study in this regard.15) A number of recommendations for addressing the problems of subjectivity and uncertainty during risk-assessment activities are presented in previous publications by the authors.2,4,10 Some useful strategies include:

- Ensure that QRM teams have a facilitator who knows about factors that can influence risk perception, and about the problems that can arise as a result of human heuristics, particularly during brainstorming sessions. This can help achieve more science-based likelihood-of-occurrence estimates and severity ratings for hazards that are not adversely influenced by risk perception factors.

- Ensure that risk-assessment teams are sufficiently diverse. This can help with failure mode identification activities, and when risk control proposals are being discussed and determined. Inviting someone onto the team with a different point of view to challenge what has been proposed can also be of value.13

- Use keywords in scales to identify levels of severity, likelihood, and detectability.

- Acknowledge that uncertainty is present during risk analysis. Useful strategies include documenting any pertinent assumptions made during the risk assessment in the risk-assessment report, and the likely range of any risk ratings (or RPNs) considered especially difficult to assess. Addressing such ranges is not unlike the approach used by storm forecasters for tropical storm predictions.

- Realize that you may know more than you think, and source the data to support that knowledge.11

- Build good science into all risk assessments by ensuring that validated data, wherever available, are given prominence over the unsupported opinions of those who may speak the loudest in risk-assessment teams. Another simple strategy is to design the risk-assessment tool to ensure the following: Before any probability, severity, or detection ratings are assigned to failure modes or hazards, the current GMP controls that may help prevent, detect, and reduce the potential effects of those failure modes or hazards should be formally documented and assessed.
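The strategy above of documenting the likely range of a hard-to-assess rating can be sketched as follows. This is an illustrative outline only: the function name, the 1–5 scales, and the example ratings are hypothetical, and it assumes the conventional RPN product of severity, occurrence, and detection.

```python
def rpn_range(severity, occurrence, detection):
    """Return the (lowest, highest) RPN consistent with the documented rating ranges.

    Each argument is an inclusive (low, high) pair of ratings. A rating the
    team is confident about is given as a pair with equal ends, e.g. (4, 4).
    """
    for low, high in (severity, occurrence, detection):
        if low < 1 or low > high:
            raise ValueError("each range must satisfy 1 <= low <= high")
    # All ratings are positive, so the extremes of the product occur at the
    # extremes of each individual range.
    lowest = severity[0] * occurrence[0] * detection[0]
    highest = severity[1] * occurrence[1] * detection[1]
    return lowest, highest


# Hypothetical example on 1-5 scales: severity is agreed, but the team is
# unsure about occurrence and about how well the detection control works.
low, high = rpn_range(severity=(4, 4), occurrence=(1, 3), detection=(2, 3))
print(f"RPN lies between {low} and {high}")
```

Reporting the pair of values, together with the assumptions behind the uncertain ratings, shows a decision maker how much the conclusion depends on estimates the team could not pin down.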

7. No meaningful risk reviews, or “once and we’re done”

Many QRM models include a recurring loop for review and monitoring; often, however, this step is disregarded.16 When reviewing a risk assessment, the assumptions, decisions, and actions made in the original risk assessment can be compared to the current situation. Ongoing monitoring activities are also important, as they can identify situations or changes that could affect the original risk assessment and the decisions made. Companies often ask “How frequently should risk-review exercises be performed?” The answer depends on various factors, including, as Q9 (1, p. 5) states, “the level of risk that was originally determined in the risk assessment.” Other useful factors to consider are:

- How much new knowledge and experience has been gained with the process of concern?

- How much uncertainty was associated with the probability estimates and with the identification of failure modes last time?

- How much has the process changed since the original risk assessment was performed?

Some risk reviews may be coupled with annual product reviews (APRs). We think this is a useful strategy and one that can make best use of the extensive data compiled for APRs. It can also be useful if clear risk-review instructions are prescribed in the risk team report (e.g., “Please review the effectiveness of the detection control for Failure Mode 5, as we relied on that control a lot when assigning the low risk rating there”). Doing this recognizes that the risk team members will usually have good insight into any problems and assumptions that arose, and they should be familiar with how dynamic (or static) the situation was, and is. Regardless of when the risk reviews are performed, it is important that reviewers have access to the original risk team’s key recommendations; these should be documented, together with information on the rationales behind key risk ratings. If there were significant uncertainty in a likelihood-of-occurrence estimate during the original risk-assessment exercise, for example, the team should document the need to reexamine this more carefully during the review exercise, taking into account certain types of information that should, by then, be available to better inform that estimate.

Conclusion

As pharma and biopharma firms continue to apply QRM, many are integrating risk-based thinking into their quality systems and making increased use of risk assessment and related tools. Teams that perform QRM activities are also becoming more competent as they develop knowledge and skills through experience. At the same time, however, several issues continue to exist across the industry; failing to address these will diminish the value gained from the industry’s QRM work. Some firms use formalized risk assessment tools inappropriately, especially when the GMPs or regulatory expectations indicate the path forward is clear. In other cases, organizations unintentionally reward reactive QRM and “firefighting” over prevention and proactivity, because their cultures are rooted and experienced in managing crises. Other issues include:

- Poorly defined and irrelevant rating scales are sometimes used in risk-assessment exercises, producing outcomes with high levels of subjectivity.

- Risk-communication and risk-review activities are either not performed or are performed only as an afterthought, providing little added value.

- The rationale for key decisions is lost or not properly documented. As a result, it can be unclear during risk-review activities why the original risk assessment team made the decisions it did.

The underlying intent of QRM is not simply to identify a risk score for a hazard or create a plan to reduce that risk; rather, it is to bring together knowledgeable people from different disciplines with various informed perspectives who can analyze, anticipate, and prevent potential problems. Not only should this help ensure safe, pure, and available medicines for the patients who need them, it should contribute to a richer and more robust understanding of products and processes within the organization.

By: James Vesper and Kevin O’Donnell

About the Authors

James Vesper, founder and president of LearningPlus, Inc., has more than 35 years’ experience in the pharmaceutical industry. Since 1991 he and his firm have worked with pharma/biopharma, device, and blood products organizations around the world on performance solutions and custom learning events; he has also been a consultant to the World Health Organization’s Vaccine Quality Network–Global Learning Opportunities. He has a BS degree in biology from Wheaton College, Wheaton, Illinois, a master’s degree in public health from the University of Michigan School of Public Health, Ann Arbor, Michigan, and a PhD in education from Murdoch University, Perth, Australia. He has been an ISPE member since 2004.

Kevin O’Donnell is Market Compliance Manager at the Health Products Regulatory Authority in Ireland. In this role he is responsible for a number of compliance-related programs, such as the Quality Defects & Recall program and the agency’s Sampling & Analysis activities. Kevin is also a Senior GMP Inspector at the HPRA, and is currently chair of the PIC/S Expert Circle on Quality Risk Management. He earned a BS in chemistry and mathematics from University College Galway, Ireland, in 1991, followed by an MS in pharmaceutical quality assurance in 2002 and a PhD in quality risk management in 2008, both from Dublin Institute of Technology, Dublin, Ireland. Kevin has been an ISPE member since 2004.