Supporting Pharma Innovation Through Analytics

Example case studies show how the evolution of advanced analytics enables innovation in pharma.

Ensuring delivery of effective medicines requires an agile business strategy that uses technology to:

- shorten process development times from lab to commercial launch,

- establish streamlined facilities with emphasis on sustainability and flexibility,

- achieve robust quality through utilization of control and predictive capabilities,

- ensure a well-defined process operational space, and

- provide batch size flexibility to meet ever-changing market demands.

The 2020 ISPE Annual Meeting and Expo, held 1-4 November 2020, will focus on pharma colleagues sharing real-world examples via a series of educational tracks demonstrating the use of technology and process innovation to drive towards desired business outcomes. The tracks are:

- Process Development & Manufacturing

- Facilities & Equipment

- Supply Chain, Operations & Packaging Excellence

- Information Systems

- Quality Systems & Regulatory

- Cutting-Edge Industry Innovations

Pharma and other industries have adopted many enabling technologies over the years, with a proven track record of providing quality products and developing more robust manufacturing practices. But other industries, such as chemicals and food & beverage, have gone further. They have reaped the benefits of other innovative efforts like implementing fully continuous manufacturing strategies utilizing targeted process analytical technology (PAT) in concert with data analytics applications.

In recent years, pharma has shown significant progress along these lines by utilizing available technologies to obtain the required process and product metrics, storing this valuable data, and then accessing it through advanced analytics applications for near real-time decision making by subject matter experts (SMEs).

This blog post focuses on several real-world case studies in which advanced analytics was used to closely coordinate subject matter expertise with computing power to drive the desired business outcomes listed above.

The Value of Data and Advanced Analytics Applications

Why is ensuring connectivity to data and the corresponding use of advanced analytics so crucial for pharma? One reason is compliance with FDA guidance on process validation, which defines it as “the collection and evaluation of data, from the process design stage through commercial production, which establishes scientific evidence that a process is capable of consistently delivering quality product.” [1] As an example, a single continuous manufacturing campaign making several million tablets may generate tens of thousands of data points within relatively short time periods. This volume cannot be adequately supported by the old approach of waiting for someone to find and manually supply data to the subject matter expert, much less by reliance on pen and paper or spreadsheets to support the required near real-time decision making and longer-term knowledge management.

Within this new reality, whether batch or continuous, managing the process validation lifecycle is important, with emphasis on three phases: process design, process qualification, and continued process verification (CPV). At its core, continued process verification includes a comprehensive view of parameters and attributes that must be regularly observed and controlled during the lifecycle of the GMP manufacturing process.

As just one example, continued process verification is both resource- and time-intensive given the number of data sources in a continuous process. Data historians can provide data storage, but advanced analytics applications must be utilized to visualize data and to perform diagnostic, predictive, and prescriptive analysis. These applications enable more efficient identification of data trends and understanding of process variability, thus allowing the necessary modifications to ensure process control during commercial manufacture. Successful implementation of continued process verification not only ensures compliance with regulatory authorities, but also ensures higher throughput at target quality levels due to mitigated product losses.

Related to continued process verification, demonstrating process understanding and the ability to monitor trends related to long-term reliable operation requires statistical process control (SPC) and statistical process monitoring (SPM). Whether batch or continuous, implementation of these statistical processes must have a strong foundation of science and risk-based analysis. This is a key component for utilizing expertise within an organization, which can then be leveraged to identify critical aspects of the process, implement reliable process metrics, and establish relationships between a robust process and quality products.

The following case study examples illustrate the value created by this new era of advanced analytics.

Utilizing Statistical Process Control in Process Monitoring and Verification

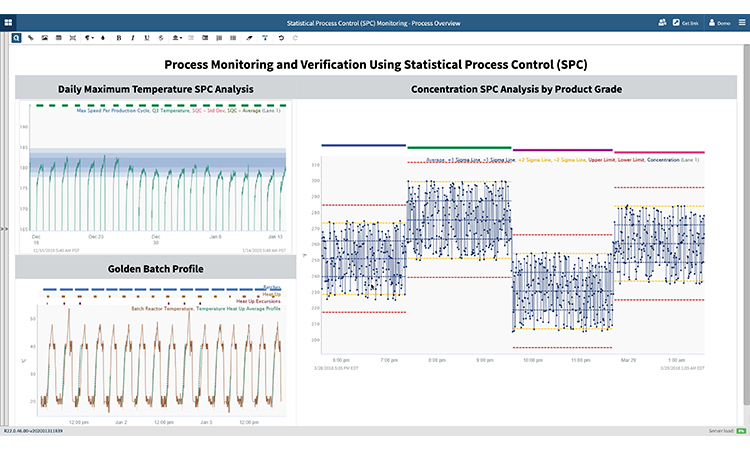

The International Society of Automation standard for batch control (ISA-88) is important when implementing procedure monitoring as part of a statistical process monitoring strategy. In this case study, a subject matter expert summarizes batch processes by aggregating metrics around process duration. Analysis steps include identifying downtime within and between batch procedures and creating key process indicators (KPIs) for duration of operations, downtime, and batch ID. Additionally, the subject matter expert can aggregate downtime to show a daily summary of procedure performance.
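The duration and downtime aggregation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the batch records, the function names, and the choice to attribute each gap to the day on which it starts are hypothetical and do not come from the case study.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical batch records: (batch ID, start, end). Values are illustrative only.
batches = [
    ("B001", datetime(2020, 11, 1, 6, 0), datetime(2020, 11, 1, 10, 0)),
    ("B002", datetime(2020, 11, 1, 11, 30), datetime(2020, 11, 1, 15, 0)),
    ("B003", datetime(2020, 11, 2, 7, 0), datetime(2020, 11, 2, 12, 0)),
]


def batch_durations(records):
    """Return {batch ID: duration in hours}."""
    return {bid: (end - start).total_seconds() / 3600 for bid, start, end in records}


def downtime_between(records):
    """Return (day the gap started, gap in hours) for each pair of consecutive batches."""
    ordered = sorted(records, key=lambda r: r[1])
    gaps = []
    for (_, _, prev_end), (_, next_start, _) in zip(ordered, ordered[1:]):
        gap_h = max((next_start - prev_end).total_seconds() / 3600, 0.0)
        gaps.append((prev_end.date(), gap_h))
    return gaps


def daily_downtime(records):
    """Sum between-batch downtime per day (assumption: a gap counts toward its start day)."""
    totals = defaultdict(float)
    for day, hours in downtime_between(records):
        totals[day] += hours
    return dict(totals)


durations = batch_durations(batches)   # hours per batch ID
daily = daily_downtime(batches)        # hours of downtime per calendar day
```

In practice the records would come from batch event data in a historian rather than a hard-coded list, and a gap that spans midnight might be split across days instead of assigned to one.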

In this example, the subject matter expert utilizes metadata about the batch, process data, product tracking data, quality analysis data, and batch event data. The subject matter expert uses procedure monitoring to track critical process parameters, or recurring issues within boundaries. Boundaries are calculated using statistical process control rules for continuous processes or created around a golden reference profile for batch processes.
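As one possible illustration of boundaries for a continuous process, the sketch below computes simple three-sigma limits from a reference window and flags new readings that fall outside them. The three-sigma rule is only one common statistical process control rule and is an assumption here, as are the function names and the sample values.

```python
from statistics import mean, stdev


def control_limits(reference, n_sigma=3.0):
    """Return (lower limit, center line, upper limit) from in-control reference data."""
    center = mean(reference)
    spread = stdev(reference)  # sample standard deviation of the reference window
    return center - n_sigma * spread, center, center + n_sigma * spread


def out_of_control(values, lcl, ucl):
    """Return the indices of readings outside the control limits."""
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]


# Hypothetical readings of a critical process parameter (illustrative values only).
reference = [50.1, 49.8, 50.3, 50.0, 49.9, 50.2, 49.7, 50.0]
lcl, center, ucl = control_limits(reference)

new_values = [50.1, 49.9, 51.5, 50.0]
flagged = out_of_control(new_values, lcl, ucl)  # -> [2]
```

Which segment of history serves as the reference window is the subject matter expert's call; limits computed from data that includes upsets would be too wide to be useful.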

A similar advanced analytics approach is used to identify a golden batch reference profile through several steps, including the determination of ideal process segment(s) for calculating statistical process control limits, setting boundaries, and monitoring variables to identify deviations. The outputs of this analysis provide a strategy for monitoring various product grades, along with the golden batch reference profile used to monitor batch reactor temperature during the heat up phase.
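A golden batch reference profile of this kind can be approximated as a per-time-point band built from several good batches. The following is a minimal sketch, assuming the batches are already aligned on a common time grid; the temperatures, the three-sigma band width, and the function names are hypothetical.

```python
from statistics import mean, stdev


def golden_profile(reference_batches, n_sigma=3.0):
    """Build (lower, center, upper) per time point from aligned reference batches."""
    profile = []
    for samples in zip(*reference_batches):  # one tuple of readings per time point
        center = mean(samples)
        spread = stdev(samples)
        profile.append((center - n_sigma * spread, center, center + n_sigma * spread))
    return profile


def deviations(batch, profile):
    """Return the time-point indices where a batch leaves the golden band."""
    return [
        i
        for i, (value, (lower, _, upper)) in enumerate(zip(batch, profile))
        if not lower <= value <= upper
    ]


# Hypothetical reactor temperatures (deg C) during heat-up, sampled at the same times.
reference_batches = [
    [20.0, 35.0, 50.0, 65.0, 80.0],
    [21.0, 36.0, 51.0, 66.0, 81.0],
    [19.0, 34.0, 49.0, 64.0, 79.0],
]
profile = golden_profile(reference_batches)

new_batch = [20.5, 36.0, 55.0, 66.0, 80.0]
off_profile = deviations(new_batch, profile)  # -> [2]
```

Real batches rarely share an identical time grid, so an alignment step (for example, resampling relative to the phase start) would normally come first.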

Cycle Time Optimization

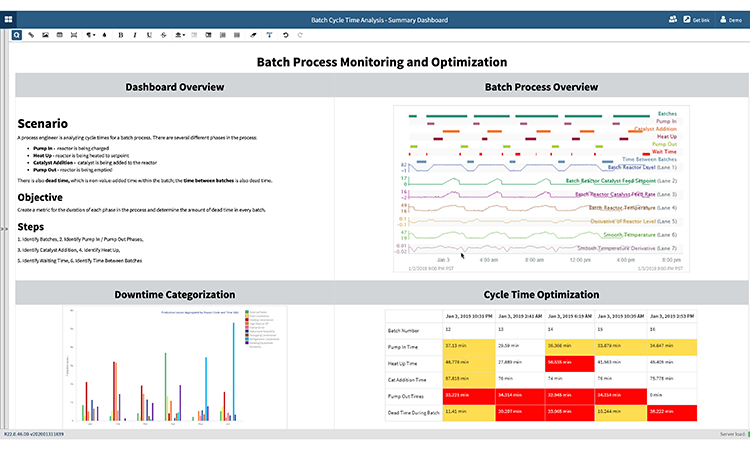

In this example, batch process optimization relies on the subject matter expert to quickly analyze the upstream manufacture of a therapeutic protein within a bioreactor, or an active pharmaceutical ingredient (API) within a crystallizer. Cycle time analysis is frequently used for batch API reaction processes, and for phases including reactor charging, heating, catalyst addition, and reactor emptying. Dead time, the non-value-added time within or between batches, must also be analyzed and reduced.

The value created by cycle time improvements is significant, but these types of batch processes are typically difficult to analyze due to the number and complexity of phases within the process. Monitoring the duration and variability of these phases, as well as downtime within and between batches, is tedious and requires constant updating of spreadsheets as new batches are produced. To perform cycle time analysis, subject matter experts typically manually sort through a stack of historical batches in spreadsheets to determine the metrics related to length and variability of phases within the processes.

Advanced analytics helps subject matter experts by providing near real-time metrics for the duration of each phase in the process, allowing them to determine the amount of dead time in every batch. For each process, key outputs can include the specific batch process overview, a site tree map overview highlighting reactor status, cycle time optimization metrics, and downtime categorization, as shown in the figure above.
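To make the phase-duration and dead-time metrics concrete, here is a small sketch that derives them from phase events. It assumes phases within a batch do not overlap; the event layout, phase names, and numbers are hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical phase events: (batch ID, phase, start minute, end minute).
events = [
    ("B001", "charge", 0, 30), ("B001", "heat", 35, 95),
    ("B001", "catalyst", 95, 110), ("B001", "empty", 130, 160),
    ("B002", "charge", 0, 40), ("B002", "heat", 40, 110),
    ("B002", "catalyst", 120, 135), ("B002", "empty", 135, 165),
]


def phase_stats(events):
    """Return {phase: (mean duration, sample std dev)} across batches."""
    durations = defaultdict(list)
    for _, phase, start, end in events:
        durations[phase].append(end - start)
    return {
        phase: (mean(d), stdev(d) if len(d) > 1 else 0.0)
        for phase, d in durations.items()
    }


def dead_time(events):
    """Return {batch ID: minutes inside the batch span not covered by any phase}."""
    spans = {}
    busy = defaultdict(float)
    for batch, _, start, end in events:
        first, last = spans.get(batch, (start, end))
        spans[batch] = (min(first, start), max(last, end))
        busy[batch] += end - start
    return {batch: (last - first) - busy[batch] for batch, (first, last) in spans.items()}


stats = phase_stats(events)  # e.g. stats["heat"] holds mean and variability of heating
idle = dead_time(events)     # dead time per batch, in minutes
```

Because the calculation is a function of the event list, it can be rerun as each new batch completes instead of updating a spreadsheet by hand.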

Quality-by-Design in Continuous Manufacturing

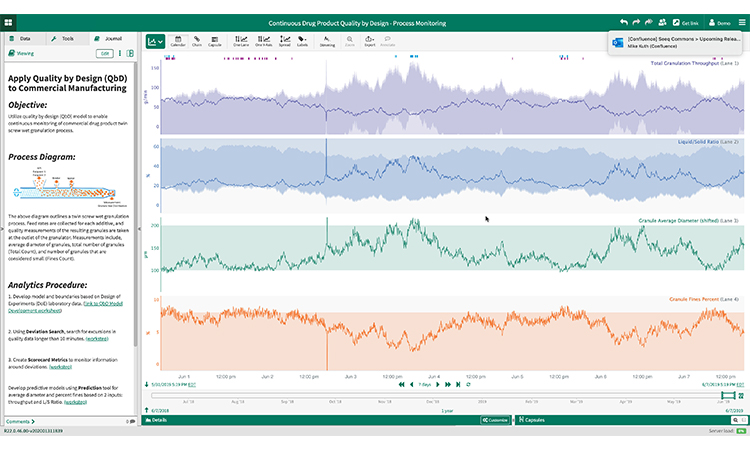

Continuous manufacturing shows promise for providing better product quality and increased yield and throughput while reducing cost. To establish a robust continuous manufacturing process in this example, the subject matter expert utilizes a design of experiments approach to create a multivariate quality-by-design model. As an example, producing a pharmaceutical drug product via a twin-screw wet granulation process requires tracking feed rates for each additive, and quality measurements of the characterization data about the resulting granules measured at the granulator outlet.

Using an advanced analytics approach, a subject matter expert directly connects to these disparate datasets and performs analytics across multiple phases within the wet granulation process. The resulting models and boundaries are then used for continuous monitoring of key process indicators in near real-time to support these efforts (e.g., total granulation throughput, liquid/solid ratio, granule average diameter, % fines).
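Two of the indicators listed above can be computed directly from feed rates, as in the sketch below. The definitions are assumptions made for illustration (throughput as the sum of all solid and liquid feeds, liquid/solid ratio as liquid feed divided by total solids feed), and the feed names, rates, and bounds are hypothetical rather than taken from the case study.

```python
def granulation_kpis(solid_feeds_kg_h, liquid_feed_kg_h):
    """Compute throughput and liquid/solid ratio from feed rates in kg/h."""
    solids = sum(solid_feeds_kg_h.values())
    return {
        "total_throughput_kg_h": solids + liquid_feed_kg_h,  # assumed definition
        "liquid_solid_ratio": liquid_feed_kg_h / solids,     # assumed definition
    }


def out_of_bounds(kpis, bounds):
    """Return the names of KPIs outside their (low, high) bounds, sorted by name."""
    return sorted(
        name
        for name, value in kpis.items()
        if not bounds[name][0] <= value <= bounds[name][1]
    )


# Hypothetical feed rates and model-derived boundaries (illustrative values only).
solid_feeds = {"api": 4.0, "filler": 15.0, "binder": 1.0}
kpis = granulation_kpis(solid_feeds, liquid_feed_kg_h=5.0)

bounds = {
    "total_throughput_kg_h": (20.0, 30.0),
    "liquid_solid_ratio": (0.15, 0.22),
}
alerts = out_of_bounds(kpis, bounds)  # -> ["liquid_solid_ratio"]
```

Granule average diameter and % fines would come from the characterization data measured at the granulator outlet and could be checked against their own boundaries in the same way.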

Summary

In the past several decades, industry has moved away from a time when pencil and paper and slide rules were state of the art. Over time, technology innovations ushered in a new era of big data, creating complexity around managing data and performing analytics. These changes created a few unwanted side effects related to the challenge of finding actionable insights.

These challenges are being addressed with the innovations around process analytical technology, along with the understanding, development, and implementation of Pharma 4.0, data storage, and advanced analytics applications to provide key metrics and directly connect subject matter experts to data. Further, collaboration between engineers and data scientists, aided by advanced analytics, is helping pharma manufacturers leverage the breadth of talent in their organizations.

This new era of reconnecting subject matter experts with the data via advanced analytics could not have come at a better time. Innovations in manufacturing and technology are generating significantly more data to analyze in a fraction of the time. Increased market demand to address drug shortages, together with cost pressures, has introduced daunting challenges. This new era of data storage and access, coupled with analytics technology, is enabling the direct connectivity of human expertise and machine learning capability. It is our opportunity to create an agile environment for finding insights to improve manufacturing processes, and to easily share these insights with other employees and regulators to meet the needs of patients safely and cost-effectively.

Check out the 2020 ISPE Annual Meeting & Expo to get a glimpse of what we have in store.