Introduction to Data Integrity & Validation for the UK Pharmaceutical Industry

The issue of data integrity may appear archaic in a highly connected, digital world. Yet many within the pharmaceutical industry continue, whether intentionally or not, to fall foul of regulators tasked with auditing businesses’ practices. Peter Cusworth at Yokogawa examines an age-old problem, its importance, and why organisations continue to get it wrong.

According to the Office for National Statistics, 610 organisations operated in the UK pharmaceutical sector in 2018. These organisations generate approximately £21 billion in market value and employ 63,000 people.1 Based on historical trends, these numbers are likely to grow with an increase in manufacturing investment.

Growth in the sector will drive the need for robust data integrity and validation processes: first, to ensure that pharmaceuticals are produced to the highest quality standards; second, to satisfy regulators, who have issued warning letters and fines for poor practice in recent years. A sector analysis from Deloitte, for example, reports that the FDA issued 75 warning letters in 2016, of which 43% cited data integrity violations.2

What is Data Integrity?

Regulatory bodies that issue guidelines for manufacturers define data integrity as the extent to which information is complete, consistent, and accurate throughout the data lifecycle. This includes all original records and true copies, metadata, and all subsequent transformations and reports.

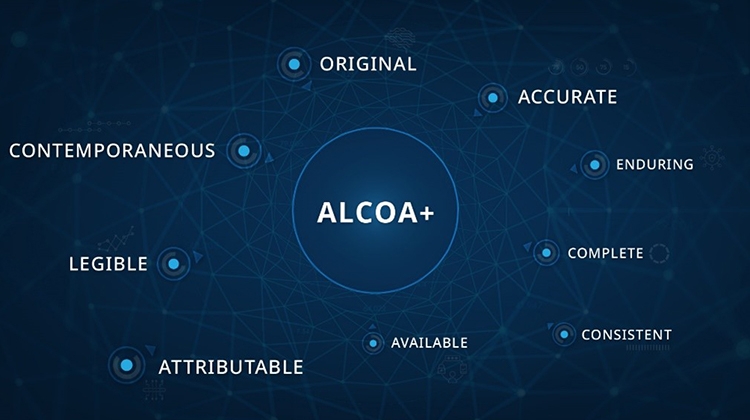

Auditors expect both paper and electronic records to be collected and maintained securely throughout the entire production process. This includes testing, licensing, manufacturing, packaging, distribution, and monitoring. Regulatory requirements are captured in the acronym ALCOA, which states that all data must be attributable, legible, contemporaneous, original, and accurate. The extended form, ALCOA+, adds that data must be complete, consistent, enduring, and available at all times, reinforcing the need for effective technology to record, store, and retrieve information when requested.
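To make the ALCOA attributes more concrete, the following is a minimal sketch in Python of how some of them might be screened automatically on an electronic record. The `Record` layout, its field names, the `alcoa_gaps` function, and the five-minute delay are all assumptions made for illustration; they do not come from any regulator's guidance or vendor system. Accuracy is left out deliberately, since it cannot be judged from metadata alone.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass(frozen=True)
class Record:
    """Hypothetical record layout; the field names are illustrative only."""
    value: str                   # the recorded observation, e.g. "pH 7.2"
    recorded_by: Optional[str]   # user or instrument that captured it
    observed_at: datetime        # when the event happened
    recorded_at: datetime        # when it was written down
    is_original: bool            # original record or verified true copy


def alcoa_gaps(record: Record,
               max_delay: timedelta = timedelta(minutes=5)) -> List[str]:
    """Return the ALCOA attributes that the record visibly fails.

    The default delay is an arbitrary placeholder, not a regulatory limit.
    """
    gaps = []
    if not record.recorded_by:
        gaps.append("attributable")
    if not record.value.strip():
        gaps.append("legible")
    delay = record.recorded_at - record.observed_at
    if delay < timedelta(0) or delay > max_delay:
        gaps.append("contemporaneous")
    if not record.is_original:
        gaps.append("original")
    return gaps
```

A check of this kind can only flag obvious gaps, such as a missing signature or a late entry. It does not replace review by a quality group.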

Why is Data Integrity Important?

Data integrity is essential for most day-to-day business processes, but its importance is critical when developing and manufacturing pharmaceuticals. Drug developers and manufacturers need accurate, reliable data to maintain the efficacy and quality of a product. Auditors cannot always be on-site to oversee every processing and production stage, so checks and balances are necessary to protect patient safety.

Good data integrity processes ultimately build trust between industry and regulatory bodies while also helping to limit the chances of product recalls, compliance issues, and damage to a business’s reputation. This is best illustrated by a lawsuit from 2018, which saw Fresenius SE cancel its $4.3 billion acquisition of US drugmaker Akorn Inc. Fresenius claimed that Akorn did not have sufficient controls in place to guarantee the reliability and validity of data gathered during process development for one of its products. Once a judge ruled that Fresenius’s withdrawal was justified, Akorn’s shares dropped by 59%.3

Historical Challenges

Fresenius vs. Akorn is exceptional because it scuppered a significant acquisition, but the conditions that caused the deal to be called off are not unusual. Indeed, data integrity and validation continue to be a problem for many organisations in the sector, not least because ‘hybrid’ systems that exist half on paper and half digitally are still relatively common. The World Health Organisation’s guidelines discourage these systems and say that migrating to a completely digital system should now be prioritised.4

Such moves, however, are perceived to be difficult and time-consuming. A typical facility will have many machines that generate data, usually developed by different companies using different recording techniques. Many machines will not come with software. Those that do will require validation: a systematic approach to demonstrating that any process in a pharmaceutical facility will operate within specified parameters whenever it is called upon. This process can take months to complete, depending on the experience of those carrying out the validation and whether they are following specific procedures.

Some organisations offer integration of all instruments, but this usually falls short of ALCOA requirements and can drag out the process, as each connection has to be assessed and tested individually. ‘Off the shelf’ software solutions are seen as the preferred way to digitise paper-based approaches because they are tested more rigorously and simplify the validation process, even when connecting multiple machines and instruments.5

Beyond these practical challenges, businesses also have to contend with a complex regulatory landscape that continues to shift. To combat the recent spike in compliance failings, the World Health Organisation, the UK Medicines and Healthcare products Regulatory Agency (MHRA), and the US Food and Drug Administration (FDA) have all issued guidance for maintaining data integrity. For manufacturers, these updates also sit alongside other guidance from the International Society for Pharmaceutical Engineering, such as GAMP® 5, the GAMP® Guide on Records & Data Integrity, and the GAMP® RDI Good Practice Guides on Data Integrity, which define good practice when using automated systems in the sector.

Emerging Problems

While regulators welcome the move from hybrid systems, businesses are still being flagged for data integrity shortcomings even when using newer digital systems. One of the biggest challenges that has emerged over the last twenty years relates to audit trails, particularly among companies that use software with audit trail functionality. There is a tendency to fit and forget without revisiting the data and understanding what’s being collected and why. This can pose challenges when work is being submitted to a quality group for review.
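As a rough illustration of what revisiting an audit trail could look like in practice, the sketch below picks out changes and deletions that carry no recorded reason, so that a reviewer can concentrate on them. The `AuditEntry` layout, its field names, and the `entries_needing_review` function are hypothetical. Real audit trail exports differ from vendor to vendor, and the review criteria would be set by the organisation's own procedures.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class AuditEntry:
    """Hypothetical audit trail row; real exports vary by vendor."""
    user: str
    action: str       # e.g. "create", "modify", "delete"
    field_name: str   # which data field was touched
    old_value: str
    new_value: str
    reason: str       # free-text justification, may be empty


def entries_needing_review(trail: Iterable[AuditEntry]) -> List[AuditEntry]:
    """Pick out changes or deletions that carry no recorded reason."""
    return [
        entry for entry in trail
        if entry.action in {"modify", "delete"} and not entry.reason.strip()
    ]
```

Run periodically over an exported trail, a filter like this gives a short list of entries to question, rather than leaving the trail to accumulate unread.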

The age of some automated systems is another critical concern, particularly for drug manufacturers. As equipment reaches a certain age, hardware and software components inevitably fail or become obsolete. This jeopardises not only the validity of some data but also the required quality of a product. However, major retrofitting projects come with their own risks, and business owners are advised to proactively manage their systems to identify when components need replacing. This approach limits plant downtime and ultimately protects data validity, even as processing changes are made.

Ultimately, even the most conservative organisations will agree that data integrity can never be fully assured. It’s an ongoing process that has to be appraised at regular intervals. However, technology now exists to simplify the task, even as the volume of data collected increases.