How Robust Is Your Process Capability Program?

ISPE's Process Capability team has developed an industry-specific maturity model that can help companies design a robust process-capability program and compare it to those of their peers. The model has been substantiated by surveying 15 companies.

Process capability is an index that compares quantitative process variability to its specification limits over a predefined period. Typically, the higher the index, the tighter that process property has remained within its specifications.

There are different types of process-capability indices (Table A). Some predict future capability while others describe past performance. Some are based on long-term variability, others on short-term variability. While all serve the same high-level purpose, different indices are better suited to certain situations.1,2,3

| Capability index | Description | Formula | Estimation of σ |
|---|---|---|---|
| Process capability: the 6σ range of a process’s inherent variation (short term) | | | |
| Cp | Tolerance width divided by the short-term process variability, irrespective of process centering | \( C_{p}=\frac{USL-LSL}{6\sigma} \) | \( \sigma = \bar{R}/d_{2} \) |
| Cpk | Capability index that accounts for process centering | \( C_{pk}=\min \left[ \frac{USL-\mu}{3\sigma},\frac{\mu-LSL}{3\sigma} \right] \) | |
| Process performance: the 6σ range of a process’s total variation (long term) | | | |
| Pp | Tolerance width divided by the long-term process variability, irrespective of process centering | \( P_{p}=\frac{USL-LSL}{6\sigma} \) | \( \sigma=\sqrt{\sum_{i=1}^{n}\frac{\left( x_{i}-\bar{x} \right)^{2}}{n-1}} \) |
| Ppk | Performance index that accounts for process centering | \( P_{pk}=\min \left[ \frac{USL-\mu}{3\sigma},\frac{\mu-LSL}{3\sigma} \right] \) | |
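The formulas in Table A can be sketched in a few lines of Python. This is a minimal illustration, not a validated implementation: it assumes individual batch results (so the short-term σ is estimated from the average moving range of consecutive results with d2 = 1.128 for subgroups of two), and the function name, data, and limits are hypothetical.

```python
import statistics

# d2 constant for subgroups of size 2, i.e. moving ranges of consecutive results (assumption).
D2_SUBGROUP_OF_2 = 1.128


def capability_indices(values, lsl, usl):
    """Return Cp, Cpk, Pp, and Ppk for a list of individual results."""
    mean = statistics.fmean(values)

    # Short-term sigma: average moving range divided by d2.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    sigma_short = statistics.fmean(moving_ranges) / D2_SUBGROUP_OF_2

    # Long-term sigma: sample standard deviation (n - 1 denominator).
    sigma_long = statistics.stdev(values)

    def width_ratio(sigma):
        return (usl - lsl) / (6 * sigma)

    def centered_ratio(sigma):
        return min((usl - mean) / (3 * sigma), (mean - lsl) / (3 * sigma))

    return {
        "Cp": width_ratio(sigma_short),
        "Cpk": centered_ratio(sigma_short),
        "Pp": width_ratio(sigma_long),
        "Ppk": centered_ratio(sigma_long),
    }


# Hypothetical assay results (% of label claim) against limits of 95.0 to 105.0.
assay = [99.8, 100.4, 99.5, 100.9, 100.1, 99.2, 100.6, 100.0]
print(capability_indices(assay, lsl=95.0, usl=105.0))
```

The only difference between the Cp/Cpk pair and the Pp/Ppk pair in this sketch is which estimate of σ is used; the specification limits and the mean are shared.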

- 1Breyfogle, Forrest W. Implementing Six Sigma: Smarter Solutions Using Statistical Methods. New York: John Wiley & Sons, Inc., 1999.

- 2Engel, Richard. “Cp/Cpk vs. Pp/Ppk: What Is the Difference? Which One Should I Use?” April 26, 2010. https://pt.scribd.com/document/144333937/cpk-vs-ppk-4

- 3Ramirez, B., and G. Runger. “Quantitative Techniques to Evaluate Process Stability.” Quality Engineering 18, no. 1 (2006): 53-68. http://www.tandfonline.com/doi/abs/10.1080/08982110500403581

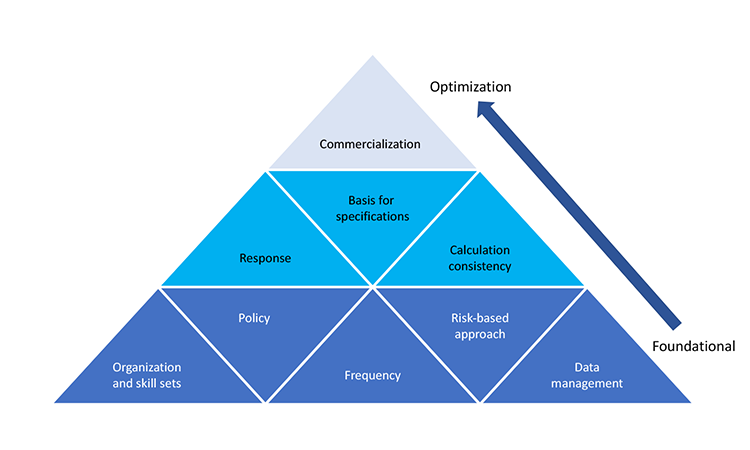

Process-capability indices can help identify opportunities to improve manufacturing process robustness, which ultimately improves product quality and the reliability of product supply; this was discussed in the November 2016 FDA “Submission of Quality Metrics Data: Guidance for Industry.”4 To make the best use of process-capability concepts and tools, it is important to develop a program around them. We have identified nine areas that should be considered and for which a certain level of proficiency or understanding is recommended:

- Policy: A framework that provides direction and sets process-capability expectations in an organization

- Data management: A system for collecting, managing, and assessing data

- Frequency: How often process-capability indices are calculated

- Basis for specification: How specifications are developed and linked to clinical studies

- Calculation consistency: Process-capability calculation methodology

- Response: Thresholds that specify required action(s) and shift attention to low-capability products

- Organization skill set and execution: Process capability knowledge across the organization

- Risk-based approach: How process capability supports an overall risk-management program

- Commercialization: The stage at which product life cycle monitoring and variability sources are optimized

These focus areas have been assembled into the ISPE Process Capability Model (Figure 1). Foundational areas constitute the base of the pyramid, while more advanced areas geared toward manufacturing optimization constitute the second and third tiers. This identifies organizational strengths and weaknesses and helps prioritize efforts.

- 4US Food and Drug Administration. Guidance for Industry. “Submission of Quality Metrics Data.” November 2016. https://www.fda.gov/downloads/drugs/guidances/ucm455957.pdf

Each focus area has a five-stage maturity continuum: initial, repeatable, defined, managed, and optimizing. These are summarized in the tables at the end of this article.

We do not recommend using process capability as a reportable compliance metric, due mainly to associated statistical issues and complexities. There is no industry consensus, for example, on how specifications should be set. Process capability values could therefore differ between two pharmaceutical companies that use the same manufacturing process to produce the same product; specifications established and negotiated with regulatory agencies could be different.

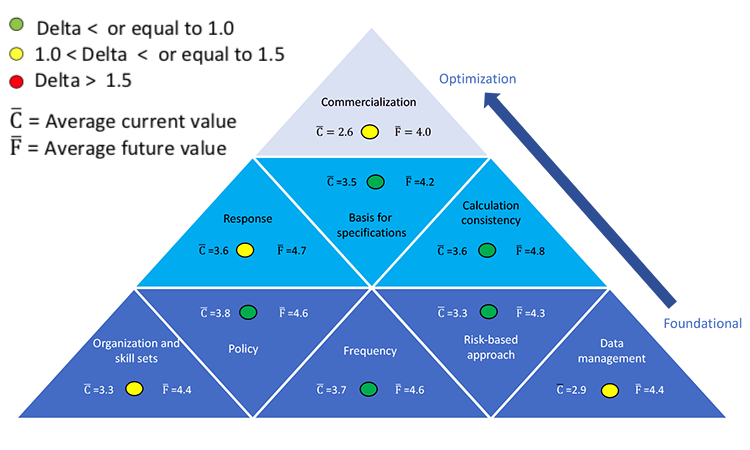

We conducted a survey asking companies to rate their organizations on a scale of 1 (basic) to 5 (advanced) in each area, both as they are today (current state) and as they intend to be in 2–3 years (future state). This survey may help the biotech and pharmaceutical industries to:

- Increase familiarity with process capability, enable a process improvement mind-set, and socialize the process-capability model

- Assess the efficiency and effectiveness of a process-capability program

- Develop a consistent approach for calculating process-capability indices, and conduct a systematic assessment to measure and compare process-capability maturity within and across organizations

- Determine which level of maturity is appropriate for an organization based on its business needs and desired risk profile

The results of that survey are shown in Figure 2. Colors indicate the gap (delta) between the current state and the desired future state (2–3 years away); green represents the smallest difference and red the greatest. Users can focus on foundational areas first, then shift to optimization areas.

Demographics

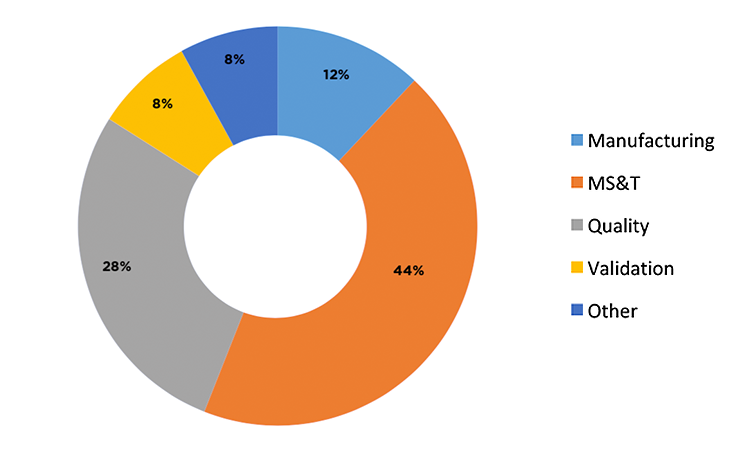

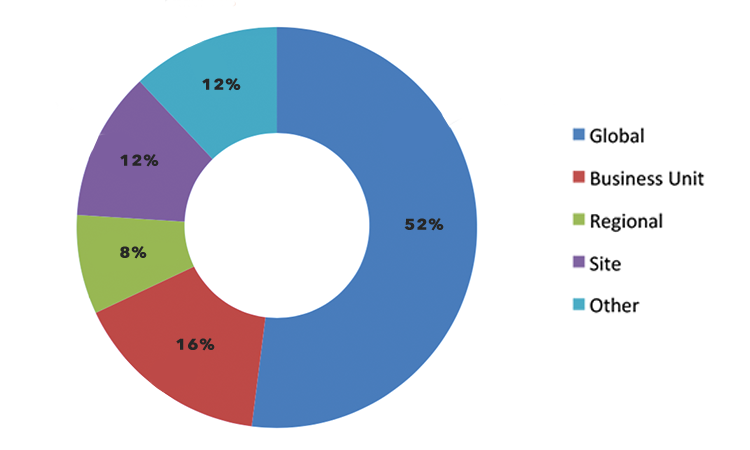

There were 15 respondents from 11 “big pharma” companies (annual sales > $1 billion) whose understanding of process-capability indices, level of involvement, and statistical understanding were rated as good to excellent. Of these respondents, 53% used Cpk for calculating process capability, 27% used Ppk, and 20% used both. The demographics of the 15 respondents are further described in Figure 3 and Figure 4.

Participants had been measuring process capability for between 3 and 15 years; all reported that it took a minimum of 2 years to see benefits.

Policy

The goal of a global procedure is to define process-capability standards regarding the application scope, capability calculation, and response to low-performing processes.

On a scale of 1 to 5, survey participants rated their current state on average at 4.2, indicating that process capability SOPs exist globally or at a business unit level, capability analysis is done for the product portfolio, and a response is defined for low-performing products.

Some respondents stated that their process-capability program began several years ago and, according to one respondent, now includes “… all drug substances and products, global, includes third parties, fairly matured, structured process for years.” For those in an earlier phase of the journey, as another respondent indicated, “policy expectations are defined, [but] contract manufacturers may not be up to speed yet.”

Because of the small body of data available, companies typically struggle to include products from development. Some respondents in commercial manufacturing recommended starting with control charts for all products, and expanding later to evaluate process capability.

Participants clearly recognized the benefits: “Because of the procedures we have in place to address low-capability products, we have seen our capabilities rise over the years, greatly reducing our number of out of specifications (OOSs).”

When asked where they would like their program to be in 2–3 years, the average response was 4.9. To achieve this level of improvement, process capability should be evaluated not only at internal manufacturing sites, but also at contract manufacturers, testing laboratories, and in development.

On their journey to achieve this higher level of maturity, some survey respondents expressed the intent to extend their capability analysis to low-volume products, as well as older and local market products. Once a low-performing product is identified, process issues must be differentiated from testing issues. Cpk and Ppk indices (probabilistic measures) should also be compared to the actual OOS rate.
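The comparison between a probabilistic index and the actual OOS rate can be sketched as follows. This is an illustration only: it assumes results are approximately normally distributed, and the function names and inputs are hypothetical. For a centered process with Ppk = 1.0, the predicted fraction outside specification is about 0.27%.

```python
from statistics import NormalDist


def predicted_oos_fraction(mean, sigma, lsl, usl):
    """Fraction of results expected outside [lsl, usl], assuming normality."""
    dist = NormalDist(mean, sigma)
    return dist.cdf(lsl) + (1.0 - dist.cdf(usl))


def observed_oos_fraction(values, lsl, usl):
    """Fraction of actual results that fell outside [lsl, usl]."""
    out_of_spec = sum(1 for v in values if v < lsl or v > usl)
    return out_of_spec / len(values)


# Hypothetical centered process with limits at mean +/- 3 sigma (Ppk = 1.0).
print(predicted_oos_fraction(mean=100.0, sigma=1.0, lsl=97.0, usl=103.0))
```

A large difference between the two fractions may point to non-normal data, an unstable process, or testing issues rather than true process variation.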

Several opportunities for further development were also mentioned. One respondent discussed the scope of variables, saying “Go beyond quality control data and find additional leading indicators of potential batch rejections.” Another noted that process capability may be just one of several indices for monitoring: “[Craft] a comprehensive quality scorecard for the product with more than Ppk.”

Data Management

Data management is a foundational element of the process-capability pyramid, and it must be addressed satisfactorily before the benefits of a process-capability program can be realized. This seemed to be well understood by survey respondents, as this area showed the largest gap between the current and desired future states.

The goal of data management is to:

- Capture, organize, control, and distribute product and process data across organizational boundaries

- Support collaboration and decision-making among strategic partners, suppliers, and customers

- Make on-spec product in a reliable and efficient manner

Data gathering and management can be arduous if the data are recorded on paper-based systems (e.g., batch records, certificates of analysis, printouts) and then transcribed into a secure electronic database. This process is prone to errors and requires that the data integrity be checked.

On a rating scale of 1 to 5, survey participants rated themselves an average of 3.1 for this area. At this level, databases are structured consistently across products and across sites; data compilation is in part manual and in part automated. Participant comments included: “Some databases are structured; some are manually entered/updated. Network roll-up not available (in some cases 100% manual). Product-specific databases may or may not be automated.”

When asked where they would like their data management programs to be in 2–3 years, the average response was 4.5. To accomplish this, manual processes that require data verification must evolve to automated processes that include data integrity authentication. Unfortunately, pharmaceutical data management has not kept pace with industry changes and expansions over the past three decades. Local systems are still being used, even though a global system would allow data aggregation and comparison within and between product groups. It is difficult to make real-time decisions or obtain information on demand using slow offline systems.

As pharmaceutical and biologics companies become virtual, using contract manufacturing organizations and contract laboratories, linking external sources will become more critical.

Frequency

As an organization begins to develop a process-capability program, it must determine how often process capability should be calculated, and who should be involved in the review and discussion of those indices. Capability index calculation requires a minimum number of data points; statisticians often recommend 20 or more if the results are to be meaningful. Hence, process-capability indices that measure lot-to-lot variability cannot be calculated too early in the development process or the start of commercial manufacturing.
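The effect of sample size on the reliability of an index can be illustrated with a commonly used large-sample approximation for a confidence interval around an estimated Cpk. This is a sketch under stated assumptions: it assumes normally distributed data, the approximation is rough for small n, and the function name and example values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def cpk_confidence_interval(cpk_hat, n, confidence=0.95):
    """Approximate two-sided confidence interval for Cpk from n data points."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * sqrt(1 / (9 * n) + cpk_hat ** 2 / (2 * (n - 1)))
    return cpk_hat - half_width, cpk_hat + half_width


# Hypothetical estimate of 1.33 from 10, 20, and 50 batches.
for n in (10, 20, 50):
    low, high = cpk_confidence_interval(1.33, n)
    print(f"n={n}: {low:.2f} to {high:.2f}")
```

Even with 20 data points the interval around an estimate of 1.33 is wide, which is one way to see why an index calculated from only a handful of batches should be interpreted with caution.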

The minimum frequency is annual, given the regulatory requirement of assembling annual product reviews (APRs), which typically report process-capability indices, assuming that the number of available data points is sufficient. Process capability calculations also support continued process verification (CPV) programs, so the frequency of the calculation may also be set by a company’s CPV program.

At the other end of the spectrum, capability indices could theoretically be recalculated with every new batch, although this is unlikely to yield significant additional information unless a dramatic change occurred with the last batch. Other tools, such as process control charts, may be more useful for detecting minor within- or between-batch changes. Some survey responses below indicated that the more robust an organization’s product and processes are, the less frequently they conduct capability calculations.

“Current frequency is annual. Implementing quarterly program based on risk. If PPK is > 1.3 will evaluate annually.”

—Survey Participant
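A risk-based frequency rule of the kind this participant describes could be encoded as a simple lookup. The sketch below is one reading of the quote, not a recommended standard: the 1.3 threshold comes from the quote, while the function name and the assumption that everything at or below the threshold is reviewed quarterly are illustrative.

```python
def review_interval(ppk, annual_threshold=1.3):
    """Return the review interval for an attribute based on its Ppk."""
    # Attributes above the threshold are treated as robust and reviewed annually;
    # the quarterly fallback for all others is an assumption for illustration.
    return "annual" if ppk > annual_threshold else "quarterly"


print(review_interval(1.6))
print(review_interval(1.1))
```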

Some respondents calculate process capability for all batches manufactured as part of a campaign, which allows them to compare product and process performance across campaigns. One respondent indicated that “[I]f a process change has been made, it may be wise to increase the frequency with which process capability is calculated to ensure that the change is not resulting in unintended situations. Once that concern has been alleviated, the frequency of calculation of process capability can be reduced.” Another said that “[F]or products for which very few batches are manufactured, they will calculate process capability less frequently due simply to the scarcity of the data.”

Because process-capability indices are simple performance measures that allow organizations to monitor how the robustness of a product or a process evolves over time (from one campaign, year, or month to the next), they are excellent tools that can inform both technical and nontechnical managers in their resource-allocation decisions for continuous-improvement projects.

Clearly, there is no single way to set the frequency with which capability indices should be calculated. At the same time, it is apparent that criteria or rules for that purpose should be defined and used consistently across an organization.

For this area, respondents rated themselves 3.7 on average, indicating that process capabilities are periodically calculated, summarized, and shared with site leadership (although the frequency of calculation may vary from site to site), and the results are used to drive continuous improvement efforts.

Respondents also indicated that in 2–3 years they expect to reach an average maturity level of 4.5. At that level, the frequency with which process capability is calculated has been standardized across the organization’s manufacturing network. Process capabilities, in conjunction with a suite of other statistical analysis tools, are used continuously by all levels of the organization to track and communicate process performance and drive continuous improvement.

Basis For Specification

A consistent basis for specification is essential for comparing process capabilities across sites, manufacturers, etc. The concept of process capability rests on the comparison of actual manufacturing results to a meaningful specification range. In their purest form, drug-product specifications should represent the needs of the patients receiving them.

To set specifications for pharmaceutical products based on patient needs, however, clinical experience would have to cover relatively broad ranges for each product attribute. Unfortunately, this kind of clinical data is rarely available. In its absence, manufacturers rely on an assortment of other approaches, including assessments of achievable variation based on process development experience and analytical method performance, USP compendial specifications where available, specifications established for similar products manufactured with the same technology platform, bioequivalence studies, and previous manufacturing history.

The latter is the most challenging situation for process capability. Since most process-capability indices are based on a ratio of the process-variation range to the specification range, when specifications are based on process variation (commonly referred to as “process-capability specifications”), the calculation becomes circular. Process capability will inevitably fall close to 1.0, since both the numerator and denominator are based on process variation. To avoid this, the regulatory agency (often driven by risk consideration) may request tighter limits based on the available test data during the approval process. These would automatically result in process capabilities lower than 1.0.
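The circularity is easy to demonstrate numerically. In the sketch below, which is an illustration with hypothetical data and a hypothetical function name, specification limits are set at the historical mean plus or minus k standard deviations and Pp is then computed from the same data. With k = 3 the result is 1.0 regardless of the data, and any tighter limits give a value below 1.0.

```python
import statistics


def pp_with_history_based_limits(values, k=3.0):
    """Pp when the limits are set at mean +/- k * s from the same data."""
    mean = statistics.fmean(values)
    s = statistics.stdev(values)
    lsl, usl = mean - k * s, mean + k * s
    # Numerator and denominator are both multiples of s, so the data cancel out.
    return (usl - lsl) / (6 * s)


history = [99.8, 100.4, 99.5, 100.9, 100.1, 99.2, 100.6, 100.0]  # hypothetical
print(pp_with_history_based_limits(history))          # limits at +/- 3 s
print(pp_with_history_based_limits(history, k=2.5))   # tighter limits
```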

Survey participants rated their current state of specification bases at an average of 3.5. This indicates that, where possible, specifications are based on knowledge of the product attribute ranges necessary to achieve safety and efficacy. Participants cited examples of the current mix of bases for specification setting (below). These examples show that when specifications are based on previous manufacturing history, process capability will inevitably fall close to 1.0.

When asked where they would like their program to be in 2–3 years, the average response was 4.2. Some participants provided examples of mature specification-setting approaches already in place, including: “Specs linked to quality target product profile.”

The quest for more clinically relevant specifications is the subject of a newly formed ISPE team called the Clinically Relevant Specification Work Group. When consistently set, specifications that truly represent patient needs open the possibility for meaningful process-capability comparisons as envisioned in the FDA’s quality metrics draft guidance. Without universal specifications for a given product, comparisons of process performance across sites or manufacturers remain a challenge.

Calculation Consistency

On average, participants rated themselves 3.6 for their current state; this reflects consistent use of a process-capability approach, calculations, and metrics across an organization. At this level, methodologies that define data set size, confidence limits, number of batches, effect of normality, and indications for using qualitative vs. quantitative results are documented in guidelines or SOPs across at least part of the organization (local, regional, and/or global).

Organizations chose to use short-term (Cpk) or long-term (Ppk) capability indices and control charts to monitor their processes. Of the 15 survey participants, eight used Cpk, four used Ppk, and three used both Cpk and Ppk, depending on the situation. The key is to be consistent in the calculation and use of such metrics.

When respondents were asked how much further they would like to take their programs in 2–3 years, the average rating was 4.8. At that stage, capability results are used to set long-term product and process robustness improvement strategies, which are integrated into the organization’s culture and management review processes. According to one respondent, “We are working on site harmonization around using consistent metrics, implementation of remediation plans, and identification of future process-capability opportunities.”

Response

The response section maturity model is intended to identify events that trigger a response, the consistency of the response when those events occur, how well events and responses are aligned across products and sites, and the extent to which leading indicators and/or combinations of events are used to evaluate whether an event has occurred. An “event” is defined as an abnormal shift, trend, or low Cpk.

Maturity of the response portion can mean responding to failures, responding to both favorable and unfavorable changes in process capability, or responding to indications of a potential event based on leading indicators (e.g., comparing the predicted failure rate to actual failures). Maturity is also affected by the degree to which the effectiveness of the action is verified (e.g., ad hoc or no effectiveness check for a single event for a single critical quality attribute (CQA), or measuring effectiveness checks for all sites and all relevant products based on a specific event).

A robust response results in action based on established process or product thresholds, with resources and improvement activities for products with poor process capability. This consistent, focused response results in continuously improving process capability.

Survey responses for current-state maturity averaged 3.5 (defined-managed). Respondents reported that Cpk or Ppk are routinely calculated for CQAs and some other parameters such as process parameters or raw material attributes; this practice is not in place at all sites, however. At this level, the parameters, attributes, and/or CQAs where process capability is measured can also vary. Sites with response systems at this maturity level show a measurable influence on process capability. According to one survey respondent, “If Cpk < 1.33, it is discussed. Cross-functional team to understand ‘why’ are formed and plans are developed. Heads of quality or technical services will weigh in on Cpk < 1.0 … 95%–98% of Cpk are > 1.3.”
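Thresholds like those in the respondent’s quote can be written down as an explicit rule so that the same response is triggered at every site. The sketch below is illustrative only: the 1.33 and 1.0 thresholds come from the quote, while the function name and tier labels are hypothetical.

```python
def response_tier(cpk, discuss_below=1.33, escalate_below=1.0):
    """Map a Cpk value to a response tier using fixed thresholds."""
    if cpk < escalate_below:
        # Lowest capability: senior quality or technical leadership weighs in.
        return "escalate to leadership"
    if cpk < discuss_below:
        # Marginal capability: a cross-functional team investigates the cause.
        return "cross-functional review"
    return "routine monitoring"


for value in (0.85, 1.20, 1.50):
    print(value, response_tier(value))
```

Keeping the thresholds as named parameters makes it straightforward to align them across products and sites, or to adjust them as a program matures.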

Average future-state response maturity was 4.7 (managed-optimizing), indicating that companies believed that significant benefits would result from efforts to set consistent standards, respond to process-capability signals, report progress, and measure effectiveness across products and sites. More extensive and effective use of leading indicators with comparison to actual results was also desired. “Being able to predict problems before they occur results in significant business benefit,” explained one respondent.

Organization Skill Set And Execution

Survey participants rated themselves an average of 3.3 at current state, indicating that respondents found their respective organizational structures suitably designed and staffed to collect, compile, and analyze process-capability information and signals; make recommendations; and take timely action. Roles and responsibilities are clear, and associates experienced in determining process capability and expert statistical support are readily available. Process-capability programs have been in place for 3–15 years, an indication of the time typically required to achieve this level of organizational proficiency.

When asked to describe their future state organization and skills, the average response was 4.4. To achieve this level of maturity, process-capability activities must be well-defined for key business processes (e.g., technology transfer, CPV). Process capability knowledge must be pervasive and integral. Importantly, management fully embraces process capability to drive process robustness improvements and create value for the organization.

Comments indicated that as SOPs and organizations are created and training is provided, a culture change begins in which process capability becomes a standard approach to conducting business. Comments also emphasized the need for management support.

Risk-Based Approach

A risk-based (e.g., ICH Q9) approach to process capability prioritizes and applies resources where they are needed most to enhance patient safety, guarantee compliance, ensure efficient use of resources, and drive business value.

Participants rated themselves an average of 3.3 at current state. This means process-capability approaches, policies, and SOPs are risk-based, in place, and mostly in use across the organization. Risk-management tools are also in use and are well defined. These approaches strengthen the organization’s compliance record and align with the FDA’s “Pharmaceutical cGMPs for the 21st Century, A Risk-Based Approach.”5

When asked where they would like their program to be in 2–3 years, the average response was 4.2. To achieve this level, a risk-based context must be applied consistently across the entire organization; increased proficiency in the use of risk-based approaches should also be demonstrated. Process-capability monitoring must be aligned with the risk to process performance. Business value is derived from the use of process capabilities at this maturity level.

Comments from respondents indicate that the use of process capabilities often starts with a set frequency. As process-capability programs mature, monitoring frequency follows risk: the higher the risk, the more frequent the monitoring. Comments also indicate that risk analysis is used to prioritize processes for improvement, and can include more than just capability data.

Commercialization

Prior to process validation, scientific evidence must establish that the process is capable of consistently delivering quality product. The commercial process control strategy is defined in Stage 1, process design, and based on knowledge gained during development activities. Development data collection and evaluation is focused on process understanding, often including data from operating the process at extreme ranges to determine the relationship between operating parameters and quality attributes. Calculating process-capability indices at this stage may provide limited benefit because of the forced variation. In addition, at this stage of development, few runs at normal operating conditions have typically been completed (< 10). This can lead to considerable uncertainty in the process-capability estimate.

Evaluation of the process control strategy occurs during Stage 2, process qualification/validation. As appropriate and with proper scientific oversight, Stage 1 and 2 data may be combined to assess process capability. In Stage 3, continued process verification, a product- and process-performance program, including process-capability indices, can provide assurance that the process remains in a state of control and identify opportunities for continuous improvement.

Survey participants rated their current state an average of 2.6. This indicates that while the control strategy is sufficiently robust for validation purposes, the commercialization site may need to allocate resources to mitigate the risk of variability not apparent during development. Participants rated their future state at 4.0, on average. This indicates a desire to further optimize the control strategy during development and possibly find ways to enhance the use of process-capability indices at that stage of the product life cycle. Both averages (current and future) are the lowest observed in the survey. This is not unexpected as the commercialization area is the highest level on the process-capability pyramid (Figure 1).

Conclusion

This paper introduced a process-capability maturity model with nine focus areas specific to the pharmaceutical and biotech industry. It also established a hierarchy of needs among those nine areas. Pharmaceutical and biotech executives who wish to assess whether and how process-capability indices may be used in their organizations may find this framework useful.

We conducted a survey in which participant companies were asked to rate their organizations on a scale of 1 to 5 in each of the nine areas, both as they are today (current state) and as they intend to be in 2–3 years (future state). On average, respondents indicated that their current state is close to the desired future state in five of the nine areas (policy, frequency, risk-based approach, basis for specification, and calculation consistency). There are four areas where, on average, respondents wish to continue to improve their capabilities (organization skill set and execution, data management, response, and commercialization). Among those four, two are foundational areas that should be addressed first (organization skill set and execution, and data management).
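The gap for each focus area can be tabulated directly from the current-state and future-state averages quoted in the sections above. The sketch below uses only those published averages; because they are rounded to one decimal place, the computed gaps are approximate and ties are possible. The variable and function names are illustrative.

```python
# (current, future) averages as quoted in each focus-area section.
averages = {
    "Policy": (4.2, 4.9),
    "Data management": (3.1, 4.5),
    "Frequency": (3.7, 4.5),
    "Basis for specification": (3.5, 4.2),
    "Calculation consistency": (3.6, 4.8),
    "Response": (3.5, 4.7),
    "Organization skill set and execution": (3.3, 4.4),
    "Risk-based approach": (3.3, 4.2),
    "Commercialization": (2.6, 4.0),
}


def maturity_gaps(scores):
    """Return future minus current for each area, rounded to one decimal."""
    return {area: round(future - current, 1)
            for area, (current, future) in scores.items()}


gaps = maturity_gaps(averages)
# List areas from largest to smallest gap.
for area, gap in sorted(gaps.items(), key=lambda item: item[1], reverse=True):
    print(f"{area}: {gap:.1f}")
```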

In general, respondents believe that such programs will help their companies comply with key regulatory requirements (process validation, process control) and improve the business bottom line by tracking and communicating process performance effectively; this, in turn, will drive continuous improvement.

Survey responses also indicated that a process-capability program may not work best as a stand-alone effort, but should rather be integrated with key elements of a quality-management system, such as APRs and the various process-monitoring efforts that make up a CPV program.

Last, but not least, respondents also emphasized the need for engagement of key functional areas such as technical services, quality and compliance, R&D, and product development for a successful process-capability program that promotes a culture of continuous improvement.

- 5US Food and Drug Administration. Guidance for Industry. “Pharmaceutical cGMPs for the 21st Century, A Risk-Based Approach.” Final Report. September 2004. https://www.fda.gov/ohrms/dockets/ac/03/briefing/3933B1_02_Pharmaceutical%20cGMPs.pdf

Process Capability Maturity Models

This information was first presented at the ISPE Annual Meeting & Expo, 8–11 November 2015, and was updated in 2016 and 2017.

[Maturity tables for the nine focus areas. Each table lists the five maturity stages (Initial, Repeatable, Defined, Managed, Optimizing); the stage descriptions are not included here.]